Chatbots have been an essential tool in the support industry for quite some time. But have they truly delivered on the promise of seamless support experiences that businesses yearn for? Let’s dive into it through an illustrative scenario:

User: Hi, I’m looking to buy a new laptop, and I’d like to know if it’s compatible with the software I use for my design work.

Chatbot: Hello! Sure, I can help with that. What software are you using?

User: I use Adobe Creative Cloud.

Chatbot: Great choice! Adobe Creative Cloud should work on most laptops.

In the above conversation, the chatbot didn’t ask for specific details about the software version or laptop model and delivered generic responses. This lack of context and inability to provide detailed information by traditional chatbots often lead to user frustration.

Now, that hardly answered the question in the first place, did it?

Fortunately, the advent of advanced Large Language Models (LLMs) has ushered in a new era for chatbots. With their knack for understanding the nuances of human language, they have enabled chatbots to be more relevant, contextual, and intent-driven.

But, as with any technology, LLM-powered chatbots have certain limitations. They can sometimes hallucinate and generate inaccurate responses due to a limited grasp of context. Don’t worry, though: proven techniques such as prompting and temperature setting can help keep the problem in check. How, you may ask?

I’ll provide you with all the insights in this blog post, while also introducing SUVA, our cutting-edge virtual assistant, illustrating how it raises these capabilities to new heights.

Significance of Prompting – Unleashing the Full Potential of Chatbots

Good Input = Good Output

Let’s accept it – chatbots cannot read your mind; they barely have context for the type of information you seek. This is where prompting comes into play. By crafting clear instructions or commands, you can guide your bot to produce more coherent and contextually relevant responses.

Here’s how prompting plays a crucial role in optimizing chatbot performance:

- Controlled Interactions: Prompting allows organizations to control the direction of the conversation, ensuring that the chatbot stays on topic and provides relevant information.

- Contextual Understanding: Prompts establish context by clarifying user queries or guiding the conversation. Without context, chatbots may misinterpret or provide irrelevant answers.

- Handling Complex Queries: Prompting helps break down complex or multi-part queries into manageable segments, allowing chatbots to address each part systematically.

- Error Handling: When users input incorrect or invalid information, prompts can be used to guide them toward the correct information or actions. This helps prevent misunderstandings and frustration.

- Learning and Adaptation: Prompts and the responses they produce can be logged and reviewed over time, then used to refine instructions or fine-tune the model. This feedback loop helps chatbots handle similar queries better in the future and become more effective conversational agents.

- Personalization: Prompts, when including user-specific information, enable chatbots to generate personalized responses and recommendations.
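
To make the points above concrete, here is a minimal sketch of how such a prompt could be assembled in code. The `PromptSpec` class and `build_prompt` function are hypothetical names invented for this illustration; they are not part of any particular chatbot product, and the rules shown are just examples.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a support-bot prompt."""
    role: str                 # who the bot should act as
    rules: list[str]          # instructions that keep the bot on topic
    user_facts: dict[str, str] = field(default_factory=dict)  # personalization


def build_prompt(spec: PromptSpec, question: str) -> str:
    """Assemble one prompt string from the role, rules, user facts, and question."""
    lines = [f"You are {spec.role}.", "", "Rules:"]
    lines += [f"- {rule}" for rule in spec.rules]
    if spec.user_facts:
        lines += ["", "Known about the user:"]
        lines += [f"- {key}: {value}" for key, value in spec.user_facts.items()]
    lines += ["", f"User question: {question}"]
    return "\n".join(lines)


spec = PromptSpec(
    role="a support assistant for a laptop retailer",
    rules=[
        "Ask for the software version and laptop model before answering.",
        "If details are missing or invalid, ask a clarifying question.",
        "Answer each part of a multi-part question separately.",
    ],
    user_facts={"software": "Adobe Creative Cloud"},
)
prompt = build_prompt(spec, "Will this laptop run my design software?")
print(prompt)
```

Keeping the role, rules, and user facts as separate fields makes it easier to adjust one piece, such as the error-handling rule, without rewriting the whole prompt.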

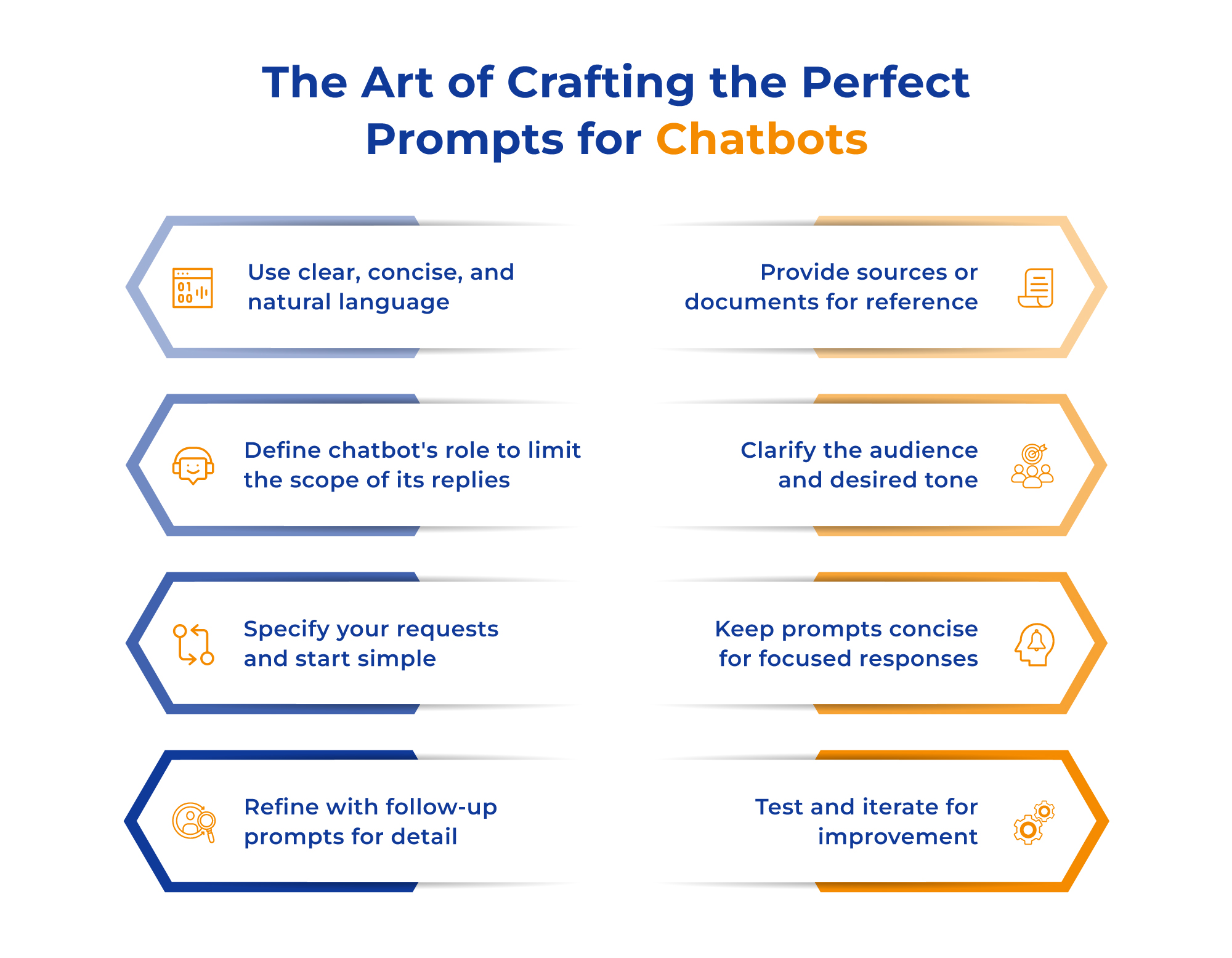

The Art of Crafting the Perfect Prompts for Chatbots

There is no one way of creating a seamless prompt, but considering a few simple steps can steer your chatbot toward generating accurate and relevant responses.

Optimizing Chatbot Output with Temperature Setting

Along with prompting, temperature is one of the most important parameters affecting chatbot performance. It controls the randomness, or creativity, of the responses.

When the chatbot generates a response, temperature works alongside the prompt: it controls how strictly the model favors its most probable next words, not how closely the output resembles the prompt.

The scale discussed here runs from 0 to 1.0 (some LLM APIs accept higher values). A value near 0 makes the chatbot produce conservative, predictable text that stays close to the most likely wording. A value near 1.0, on the other hand, nudges the chatbot toward more varied, creative, and human-like responses.
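
For the curious, here is a small sketch of the mechanism. LLM samplers commonly divide the model's raw token scores (logits) by the temperature before converting them to probabilities with softmax. The function name, the three candidate tokens, and their scores below are made up for illustration; a real model scores tens of thousands of tokens.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        # Treat 0 as greedy decoding: all probability on the top-scoring token.
        top = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == top else 0.0 for i in range(len(logits))]
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Toy scores for three candidate opening phrases (made-up numbers).
tokens = ["Certainly", "Of course", "Oh no"]
logits = [2.0, 1.0, 0.5]

for t in (0.2, 0.7, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [f"{tok}: {p:.2f}" for tok, p in zip(tokens, probs)])
```

With these toy scores, a temperature of 0.2 puts roughly 99% of the probability on the top candidate, while 1.0 lowers it to about 63%, leaving real room for the alternatives to be picked.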

Let me give you a quick breakdown of how it happens:

Low Temperature = 0.1 – 0.3

User: I have a problem with my order. Can you help?

Chatbot: Certainly, I can assist you with your order. Please provide your order number, and I’ll look into it.

When using a low-temperature setting, chatbots tend to produce formal and straightforward responses that stick closely to the user’s input. This setting is ideal for scenarios where you need accurate and concise answers to user queries.

Medium Temperature = 0.4 – 0.7

User: I have a problem with my order. Can you help?

Chatbot: Of course, I’m here to help you with your order. Can you please share your order number or any specific issue you’re facing?

At medium temperature, chatbots introduce some level of randomness and creativity into their responses while maintaining the formal tone. This setting is perfect for engaging conversations and human-like content generation.

High Temperature = 0.8 – 1.0

User: I have a problem with my order. Can you help?

Chatbot: I’m really sorry to hear that you’re having trouble with your order. Don’t worry; we’re here to assist you. Could you please provide your order number so we can get this sorted for you? We appreciate your patience!

High-temperature settings produce more creative and diverse responses, which can come across as warmer and more empathetic. They suit scenarios where businesses aim to deliver a more personalized and emotionally engaging customer experience.

Please Note: The ideal temperature setting will vary depending on the specific task and the desired output. However, 0.2 can be a good starting point for many tasks.

If you are not sure what temperature setting to use, it is a good idea to experiment with different settings and see what works best for you.
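
One way to run such an experiment is to send the same prompt several times at each temperature and compare how varied the replies are. The sketch below assumes a `generate(prompt, temperature)` callable that wraps whatever chatbot you use; `compare_temperatures` and `fake_generate` are hypothetical names, and `fake_generate` is only a stand-in so the example runs without a real model.

```python
import random
from collections import Counter
from typing import Callable


def compare_temperatures(
    generate: Callable[[str, float], str],
    prompt: str,
    temperatures: list[float],
    runs: int = 20,
) -> dict[float, Counter]:
    """Call `generate` several times per temperature and tally the replies."""
    return {
        t: Counter(generate(prompt, t) for _ in range(runs))
        for t in temperatures
    }


def fake_generate(prompt: str, temperature: float) -> str:
    """Stand-in for a real chatbot call; NOT a real API.

    It returns the default reply more often at low temperature.
    """
    replies = [
        "Please provide your order number.",
        "Can you share your order number or describe the issue?",
        "Sorry to hear that! Could you share your order number?",
    ]
    if random.random() >= temperature:
        return replies[0]
    return random.choice(replies)


random.seed(0)  # fixed seed so the sketch is repeatable
results = compare_temperatures(
    fake_generate, "I have a problem with my order.", [0.2, 0.5, 1.0]
)
for t, counts in results.items():
    print(f"temperature={t}: {len(counts)} distinct replies, top={counts.most_common(1)}")
```

Swapping `fake_generate` for a function that calls your own chatbot lets you review the tallies side by side and pick the setting whose replies best fit the task.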

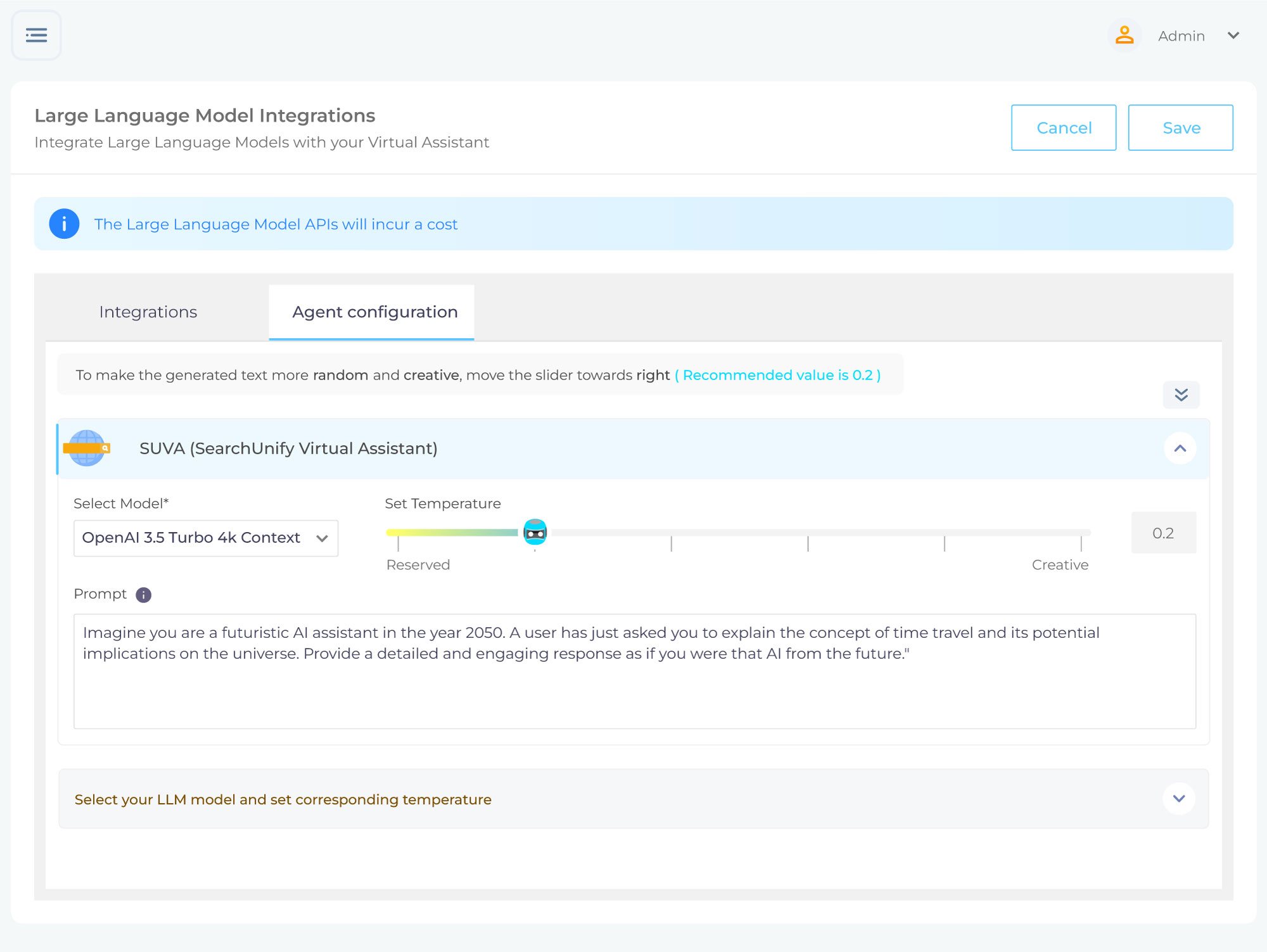

Introducing SUVA (SearchUnify Virtual Assistant) – The Triad of Precision, Creativity, and Context

SUVA (SearchUnify Virtual Assistant) is a federated, information retrieval-augmented virtual assistant designed to deliver fine-tuned, contextual, and intent-driven conversational experiences.

Let me give you a sneak peek of how it works:

When a user drops a query in the chatbox, SUVA springs into action, producing generative or direct answers based on the information available in different content sources, such as documents, video, audio, email, website, KBs, and more.

Now, you might be thinking, “Hey, doesn’t every LLM-powered chatbot do that?” Well, not quite. SUVA brings extra credibility to the table with its three special layers:

1. Prompting Layer

It plays a pivotal role in generating context from the results obtained from content sources. It serves to structure this context, which, in turn, enables SUVA to generate precise responses. In addition, it empowers the virtual assistant to be efficient in roleplaying or adapting to specific tasks for more relevant results.
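
To give a feel for what structuring context from retrieved results can look like, here is a generic sketch. It is not SUVA's actual implementation: the `Snippet` class, the `build_context_prompt` function, the character budget, and the sample data are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    """Hypothetical shape of one retrieved result."""
    source: str   # e.g. "KB article", "video transcript"
    text: str
    score: float  # relevance score from the search step


def build_context_prompt(role: str, question: str, snippets: list[Snippet],
                         max_chars: int = 600) -> str:
    """Rank snippets, keep those that fit the budget, and wrap them in a prompt."""
    parts, used = [], 0
    ranked = sorted(snippets, key=lambda s: s.score, reverse=True)
    for n, snip in enumerate(ranked, 1):
        entry = f"[{n}] ({snip.source}) {snip.text}"
        if used + len(entry) > max_chars:
            break  # stop once the context budget is spent
        parts.append(entry)
        used += len(entry)
    context = "\n".join(parts) if parts else "(no relevant content found)"
    return (
        f"You are {role}.\n"
        "Answer using only the numbered context below and cite the numbers you used.\n"
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Made-up sample data for a fictional product.
snippets = [
    Snippet("forum post", "My old laptop struggled with large design files.", 0.40),
    Snippet("KB article", "Model X ships with 16 GB of RAM.", 0.91),
]
print(build_context_prompt("a support assistant", "How much RAM does Model X have?", snippets))
```

Numbering the snippets and naming their sources gives the model a structured context to answer from, and the role line is where task-specific roleplay would be set.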

2. Temperature Setting Layer

One size doesn’t fit all when it comes to responses. SUVA acknowledges this by allowing users to fine-tune the “temperature” of responses. Users can opt for lower temperatures for straightforward, fact-based answers or crank it up for a more creative and conversational tone. This degree of customization ensures that SUVA adapts to individual preferences, making it a truly user-centric tool.

Here’s a visual representation of how SUVA can respond differently under varying temperatures:

Temperature = 0.2

Temperature = 1.0

3. Moderation Layer

The moderation layer plays a critical role in ensuring the quality of interactions. It effectively filters out open-ended queries, malicious content, and any form of hateful language, ensuring a safe and productive conversational experience for users.

All this happens within the innovative SearchUnifyFRAG™ framework.

SearchUnifyFRAG™ (Federated Retrieval Augmented Generation) leverages the combination of three layers: Federation, Retrieval, and Augmented Generation to optimize the model for more context-aware, personalized, and efficient response generation.

The Future of SUVA: What’s on the Horizon

While the innovative practices of prompting and temperature setting are already taking SUVA’s Large Language Model (LLM) capabilities to the next level, our journey doesn’t stop here. We are continually working on expanding SUVA’s horizons, ensuring it remains at the forefront of advanced virtual assistance. Here’s a glimpse of what’s in store:

- Fetching and posting data to the data warehouse

- Generating actionable insights on LLM usage

- Using LLMs to generate synthetic utterances, saving time when creating a storyboard

The transformative impact of SUVA on the support landscape is more than just talk; it’s backed by a rich tapestry of prestigious awards:

- 2023 Gold Stevie® Award in the Self-Service HR Solution – New or New-Version Category

- 2023 Customer Experience Innovation Award by CUSTOMER Magazine

- Winner in the 2022 Artificial Intelligence Excellence Awards

- 2021 AI TechAward for Best-of-Breed Chatbot

Ready to Redefine Your Conversational Experiences with SUVA?

As user expectations continue to evolve, the need to enhance LLM-powered chatbot solutions becomes increasingly clear. Effective mechanisms like prompting and temperature setting make it feasible to optimize your bot for contextual, precise, and creative responses.

However, if you’re looking to simplify this process, SUVA (SearchUnify Virtual Assistant) is the solution. With its built-in prompting and temperature-setting layers, it effortlessly facilitates coherent and engaging conversational experiences.

Interested in diving deeper? Stay tuned: I will soon roll out a comprehensive webinar series, ‘SUVA Chronicles – A Deep Dive into the Next-generation Virtual Assistant’, with my colleague Ramkrishna Agrawal (Product Manager, SearchUnify). Together, we will explore the intricacies of SUVA and give a live demo of its fine-tuned LLM-powered capabilities.

Alternatively, you can request a demo to discover more about SUVA’s innovative features and proficiencies.