Managing customer queries day-to-day is no walk in the park—it’s not just about providing an answer but crafting a solution that uniquely resonates with each customer.

If you think about it, customer interactions unfold like dynamic dialogues, akin to a multi-hop journey where finding a resolution involves navigating through multiple steps or intermediate pieces of information.

Here’s the catch, though: traditional search methods such as vector similarity search excel at uncovering similar cases but stumble when they have to link separate pieces of information, especially for complex queries.

Enter the dynamic duo: Knowledge Graphs and Large Language Models (LLMs). Together, they offer a way to tackle the complexity of multi-hop queries. Let’s take a closer look.

First Things First, What are Multi-Hop Questions?

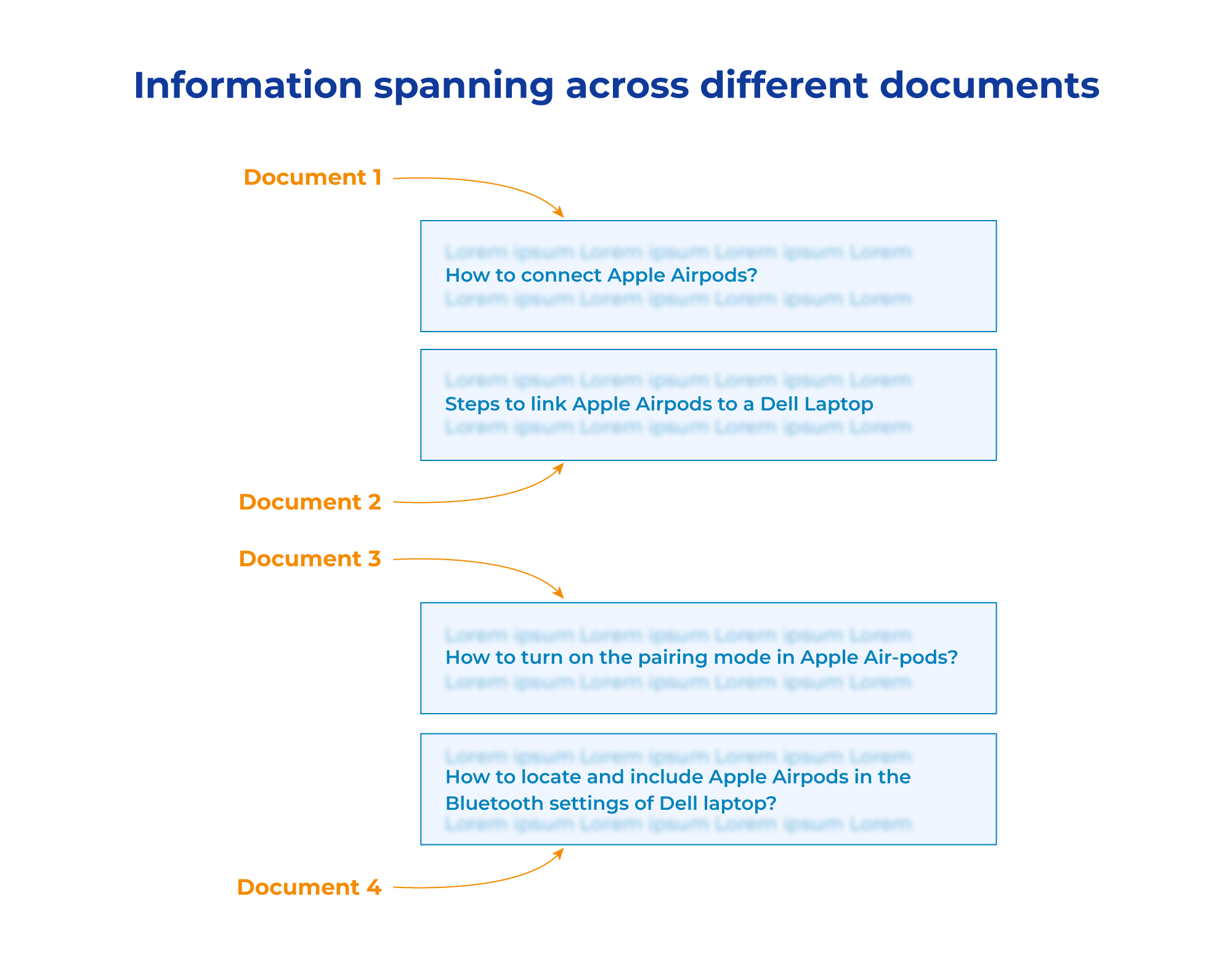

Multi-hop questions are inquiries that can’t be answered directly with a single piece of information or a simple search. Answering them involves navigating through multiple sources or layers of information, “hopping” between them to gather relevant details and provide a comprehensive response.

To shed light on the concept of multi-hop questions, let’s dive into a scenario familiar to anyone who’s navigated the labyrinth of customer support:

Imagine a customer named Sarah, facing a technical issue with a recently purchased gadget. Sarah contacts customer support with a seemingly simple question: “How do I connect my Apple AirPods to my Dell laptop?”

Now, in the world of multi-hop questions, this is just the starting point.

Hop 1: What are the requirements for connecting Apple AirPods to a Dell laptop?

Hop 2: How do I put my Apple AirPods into pairing mode?

Hop 3: How do I find and add my Apple AirPods to my Dell laptop’s Bluetooth settings?

Then, much like skilled navigators, the support team starts connecting these dots—understanding the context, identifying potential solutions, and unraveling the intricacies of the issue. The process isn’t linear; it’s a dynamic exploration, akin to solving a puzzle with interconnected pieces.

This complexity calls for innovative solutions, and that’s where LLMs come into play, offering a transformative approach to streamline and enhance the efficiency of addressing multi-hop queries in customer support.

Addressing these queries calls for a multi-hop question-answering approach. A single question unfolds into multiple sub-questions, and the LLM often needs several documents, one or more per sub-question, to generate an accurate answer.
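To make the idea concrete, here is a minimal Python sketch of that flow using Sarah's three hops. Everything in it is an assumption made for illustration: the `Hop` class, the `retrieve`, `plan_hops`, and `build_prompt` helpers, the keyword tags, and the placeholder support snippets are all hypothetical, and the keyword-overlap lookup simply stands in for whatever search index a real system would use.

```python
import re
from dataclasses import dataclass


@dataclass
class Hop:
    sub_question: str
    document: str  # supporting text found for this hop, "" if none


# Hypothetical support snippets, each tagged with hand-picked keywords.
# The text is illustrative placeholder content, not official instructions.
DOCUMENTS = [
    ({"requirements", "compatible"},
     "The laptop needs a working Bluetooth adapter."),
    ({"pairing", "mode"},
     "Open the case lid and hold the setup button until the light flashes white."),
    ({"find", "add", "settings"},
     "In the laptop's Bluetooth settings, choose to add a device and select the AirPods."),
]

# The three hops from Sarah's example, hard-coded for clarity.
# A real pipeline might ask an LLM to produce this decomposition.
SUB_QUESTIONS = [
    "What are the requirements for connecting Apple AirPods to a Dell laptop?",
    "How do I put my Apple AirPods into pairing mode?",
    "How do I find and add my Apple AirPods to my Dell laptop's Bluetooth settings?",
]


def retrieve(sub_question, documents):
    """Return the text whose keywords overlap most with the sub-question."""
    words = set(re.findall(r"[a-z']+", sub_question.lower()))
    best_text, best_score = "", 0
    for keywords, text in documents:
        score = len(words & keywords)
        if score > best_score:
            best_text, best_score = text, score
    return best_text


def plan_hops(sub_questions, documents):
    """Pair every sub-question with its best supporting document."""
    return [Hop(q, retrieve(q, documents)) for q in sub_questions]


def build_prompt(question, hops):
    """Assemble the context an LLM would receive with the original question."""
    lines = [f"Question: {question}", "Context:"]
    for i, hop in enumerate(hops, start=1):
        lines.append(f"{i}. {hop.sub_question} -> {hop.document}")
    return "\n".join(lines)


hops = plan_hops(SUB_QUESTIONS, DOCUMENTS)
prompt = build_prompt("How do I connect my Apple AirPods to my Dell laptop?", hops)
print(prompt)
```

The point of the sketch is the shape of the pipeline: one question becomes several sub-questions, each sub-question pulls in its own document, and only then does the LLM see the combined context.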

LLMs in Action: Bridging the Gap in Complex Queries

Large Language Model-based systems step in to tackle intricate questions, acting like super-smart assistants that understand the context and generate human-like text.

There’s a small hiccup, though: at times, they “hallucinate,” providing information that isn’t accurate. For all their capabilities, LLMs show that handling knowledge is not a one-tool job. The next section looks at how to address this challenge.

The Role of Knowledge Graphs in Overcoming LLM Limitations

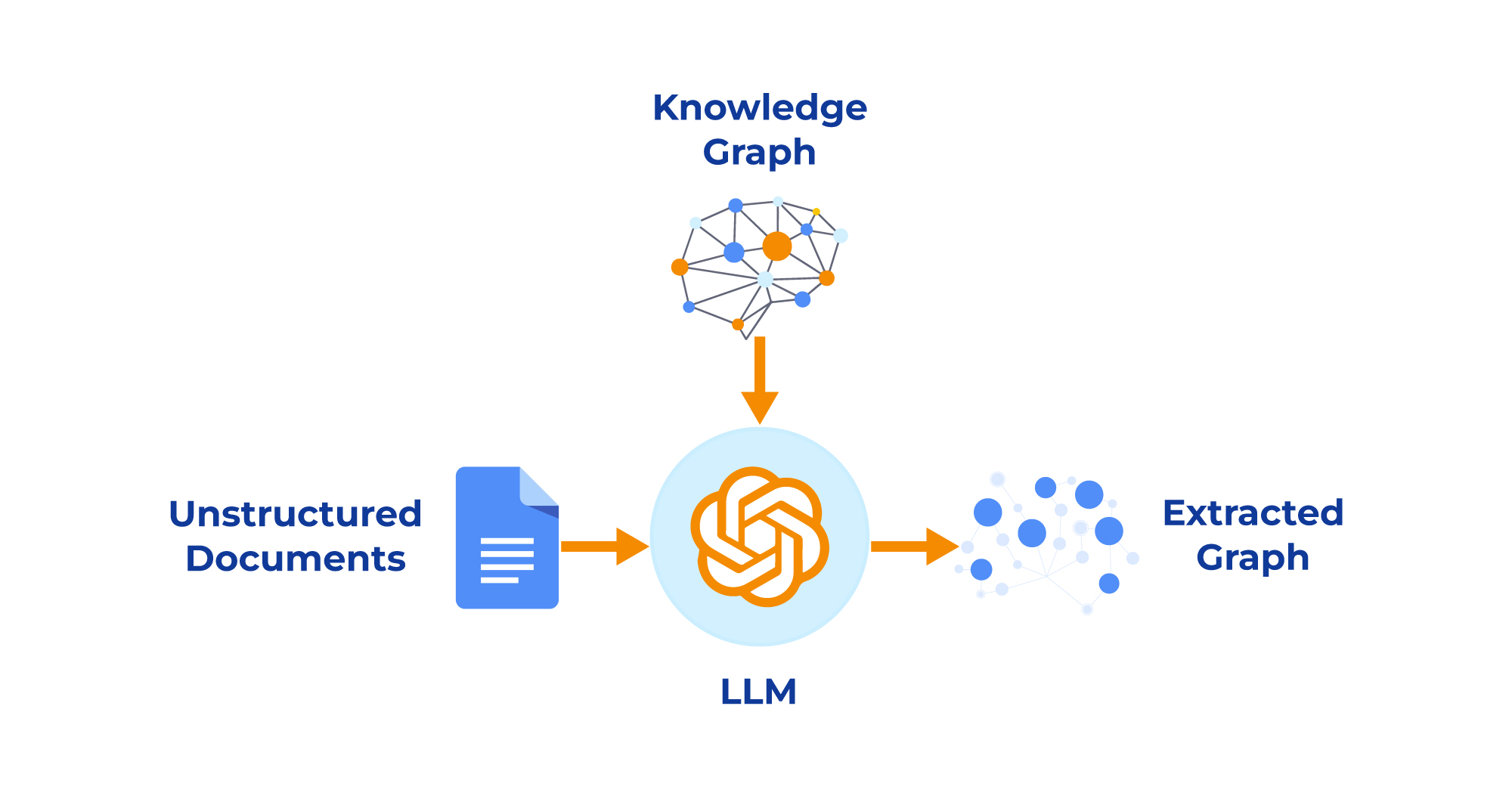

Did you know that integrating a knowledge base into an LLM tends to improve the output and reduce hallucinations?

Knowledge Graphs act as a reliable anchor for LLMs, providing a structured and verified knowledge base that helps counteract hallucinations by promoting accuracy, context-awareness, and logical reasoning.

- Grounding in Real-world Knowledge

Knowledge Graphs are structured repositories based on real-world information. They provide a factual foundation that helps LLMs stay grounded in accurate and verified data. By referencing them, LLMs can cross-verify generated information, reducing the likelihood of hallucinations.

- Contextual Understanding

Knowledge Graphs offer a contextual framework by connecting entities and their relationships. LLMs can leverage this context to generate responses that align with the logical structure of the information in the graph. This contextual understanding acts as a corrective measure, preventing LLMs from generating responses that deviate from established relationships.

- Validation through Multi-hop Queries

Knowledge Graphs excel at handling multi-hop questions, which require traversal through interconnected entities. LLMs can use them to validate their responses against a logical chain of reasoning. This process adds an extra layer of verification, reducing the risk of hallucinations by ensuring that responses align with the overall knowledge structure.

- Human-like Reasoning

Knowledge Graphs facilitate a more human-like reasoning process. LLMs can be guided by the structured information in them, enhancing their ability to reason and respond in a manner consistent with real-world logic. This guidance minimizes the chances of LLMs generating hallucinated or implausible content.
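The multi-hop traversal and validation ideas above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the triples in `TRIPLES` are made up for the AirPods example, and `build_graph`, `find_path`, and `is_supported` are hypothetical helper names rather than the API of any particular graph database or of SearchUnify.

```python
from collections import deque

# Hypothetical (subject, relation, object) triples, for illustration only.
TRIPLES = [
    ("AirPods", "connects_via", "Bluetooth"),
    ("Dell laptop", "supports", "Bluetooth"),
    ("Bluetooth", "requires", "pairing mode"),
    ("pairing mode", "activated_by", "setup button"),
]


def build_graph(triples):
    """Index triples as a directed adjacency list: node -> [(relation, node)]."""
    graph = {}
    for subj, rel, obj in triples:
        graph.setdefault(subj, []).append((rel, obj))
        graph.setdefault(obj, [])
    return graph


def find_path(graph, start, goal):
    """Breadth-first search; returns the hops as triples, or None if unreachable."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for rel, nxt in graph.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, rel, nxt)]))
    return None


def is_supported(claim, triples):
    """Check a single generated claim against the facts stored in the graph."""
    return claim in set(triples)


graph = build_graph(TRIPLES)

# Multi-hop: how does "AirPods" relate to "setup button"?
for hop in find_path(graph, "AirPods", "setup button"):
    print(hop)

# Validation: a claim with no backing triple is flagged as unsupported.
print(is_supported(("AirPods", "connects_via", "Wi-Fi"), TRIPLES))
```

Each hop in the returned path is an explicit, checkable fact, which is what lets the graph act as a verification layer for an LLM's answer instead of relying on the model's own recall.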

Ready to Optimize Information Retrieval with Knowledge Graphs and LLMs?

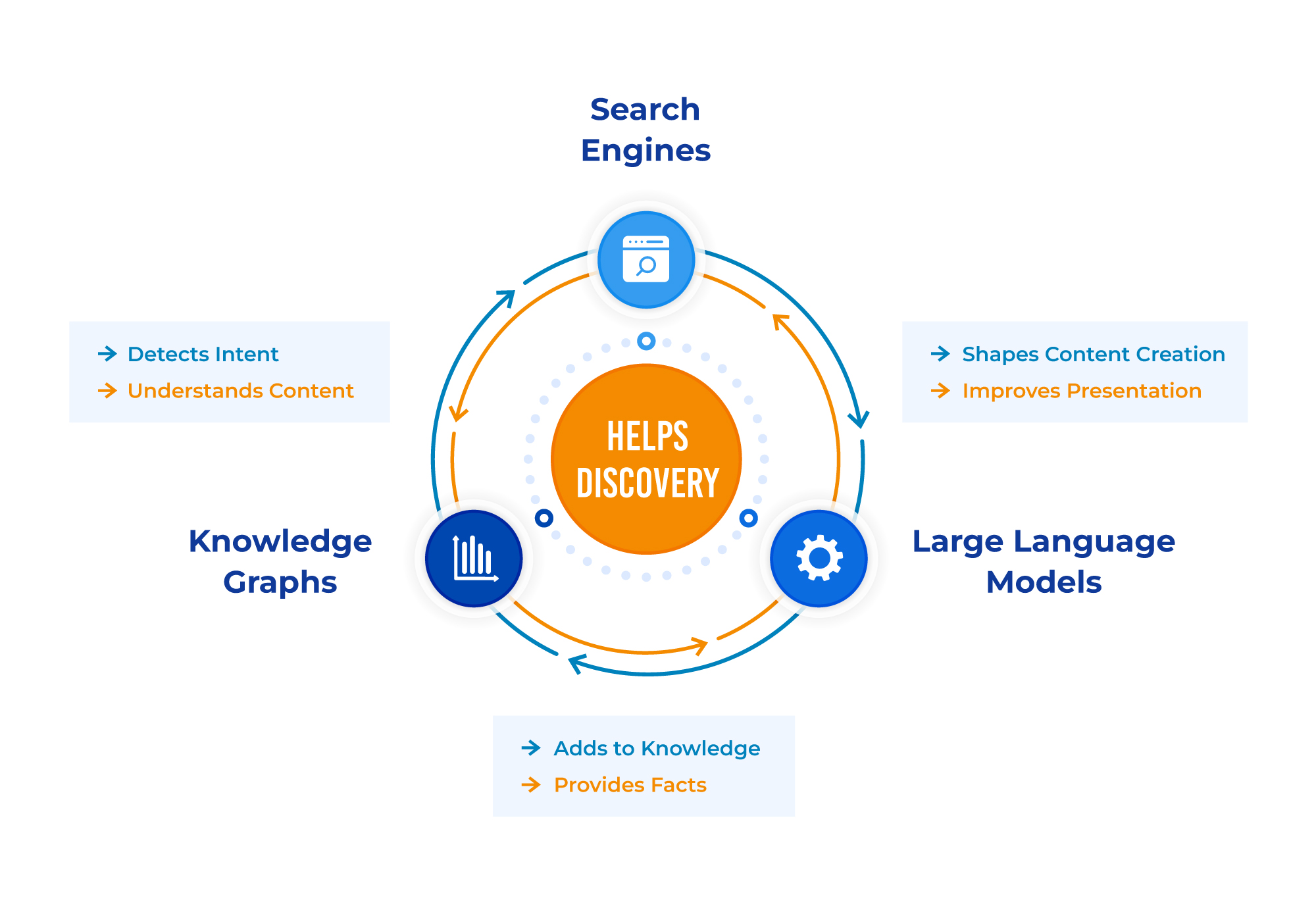

To conclude, Language Models and Knowledge Graphs combine their strengths to overcome the limitations of natural language processing and enhance information retrieval.

Curious about how SearchUnify tackles LLM shortcomings, making it a secure platform to harness this power? Request a demo now and discover firsthand how our unified cognitive platform transforms data into a strategic advantage!