Chatbots will become the primary customer support channel for roughly a quarter of organizations by 2027.

Let’s step into the world of chatbots, where decades of evolution have paved the way for a groundbreaking revolution. It all began with ELIZA, the very first chatbot, born in 1966, that left users spellbound with its human-like conversational simulation. Since then, these digital marvels have been steadily infiltrating diverse industries, particularly in the realm of customer support.

Behind the scenes, chatbots have harnessed the power of Artificial Intelligence (AI), Natural Language Processing (NLP), and Machine Learning (ML) to unravel the complexities of user queries and respond in the blink of an eye. But a new era has dawned with Large Language Models (LLMs) at the forefront, reshaping the chatbot landscape like never before.

In this blog post, we’ll delve into the evolution of chatbots, the transformative impact of LLMs, and the promising future that awaits chatbot technology.

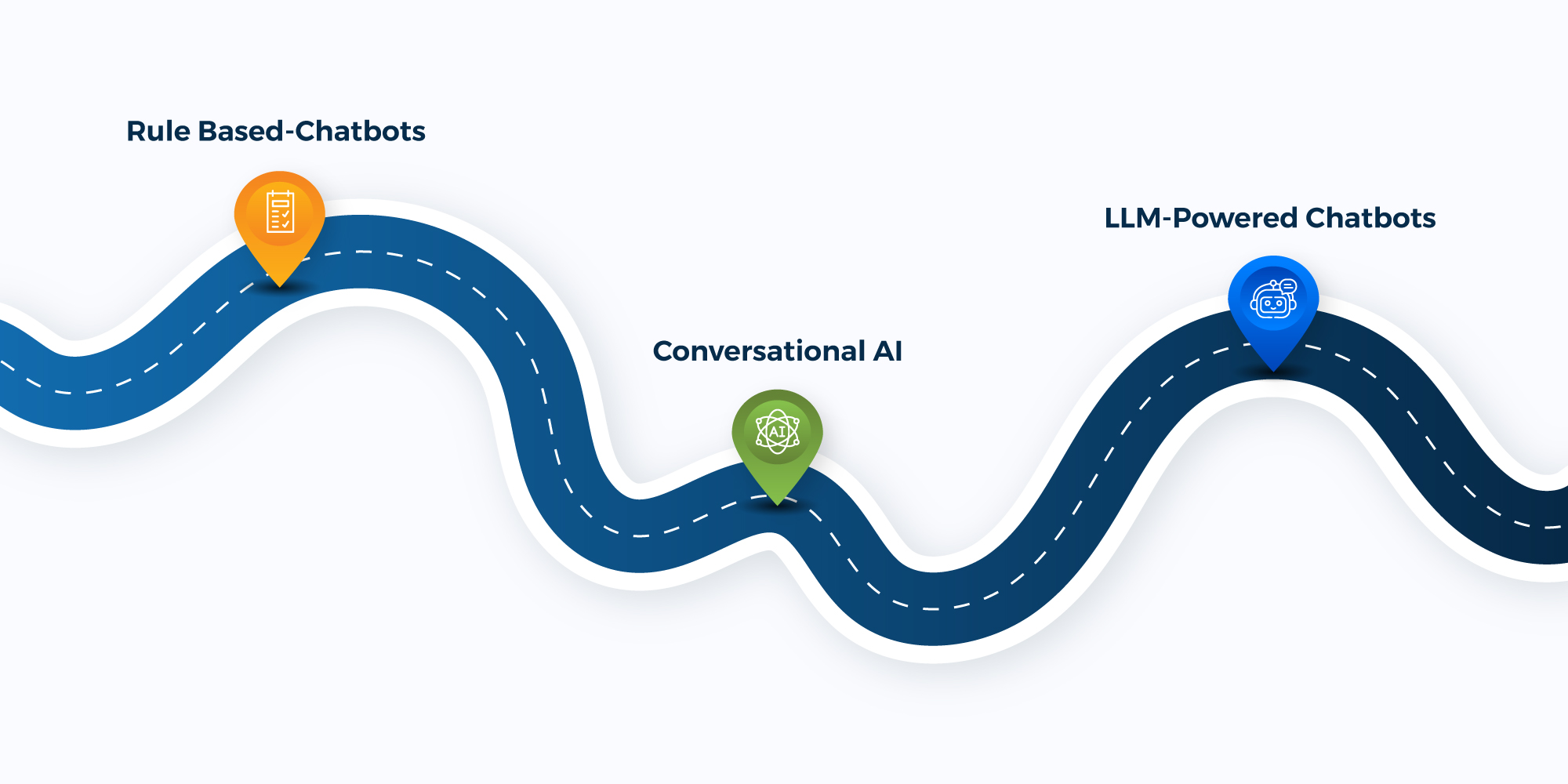

How Rule-Based Bots With Predefined Patterns Laid the Foundation

Did you know that the first generation of chatbots—ELIZA, Artificial Linguistic Internet Computer Entity (A.L.I.C.E.), and SmarterChild—operated on simple rule-based systems, delivering responses based on predefined patterns?

Though impressive for their time, these chatbots encountered challenges when faced with intricate queries due to their limited NLP capabilities.
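To make the idea of predefined patterns concrete, here is a minimal sketch of a rule-based bot in the spirit of those early systems. It is not ELIZA's actual script: the rules, the `respond` function, and the fallback text are all hypothetical and chosen only for illustration.

```python
import re

# Hypothetical rules for illustration: each pairs a regex with a response template.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\b(hello|hi)\b", re.IGNORECASE), "Hello. How can I help you today?"),
]

# Returned when no rule matches, which is where rule-based bots fall short.
FALLBACK = "Please tell me more."


def respond(message: str) -> str:
    """Return the response for the first rule whose pattern matches."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Reuse the captured words from the user's message in the reply.
            return template.format(*match.groups())
    return FALLBACK


print(respond("I need a refund."))
print(respond("My order arrived late and the box was damaged"))
```

The second message matches none of the patterns, so the bot can only fall back to a generic prompt. Every new kind of query needs another hand-written rule, which is why such bots struggled with intricate requests.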

Conversational AI Breaking the Boundaries

The significant advancement in AI led to the rise of Conversational AI in the early 2010s. It leveraged advanced NLP and ML algorithms to understand natural language more accurately than rule-based bots. It went beyond keyword matching and learned from past user interactions, thereby offering more personalized assistance.

However, the major problem with traditional AI-based chatbots is that they struggle to comprehend the intricacies of human language and maintain contextual awareness.

LLMs at the Forefront: Empowering Chatbots to Excel

LLMs, such as OpenAI’s GPT-4, have propelled chatbot popularity to new heights. Trained on a massive corpus of text data, they can generate human-like text and perform a wide range of NLP tasks with high accuracy.

When integrated with chatbots, LLMs can empower them to produce coherent and contextually relevant responses, which was not as feasible with traditional chatbots.

Let’s explore the key features that make LLM-powered chatbots stand out:

- Natural Language Understanding (NLU): As already mentioned, LLMs possess exceptional capabilities in understanding the subtleties of human language. This empowers chatbots to accurately interpret user queries and deliver contextually appropriate responses.

Suppose a user asks an LLM-powered chatbot, “Can you recommend a good Mexican restaurant nearby?” By understanding the intent and cuisine preference, the chatbot will provide personalized suggestions for highly-rated Mexican restaurants in the user’s vicinity.

- Text Generation: LLM-powered chatbots incorporate natural language patterns, grammar rules, and tones to produce personalized and engaging responses. The human touch helps build trust and rapport with users, making the conversation feel more natural and authentic.

- Multilingual Support: LLMs enable chatbots to support multiple languages effortlessly. They can understand and respond to user queries in various languages, breaking down language barriers. As a result, businesses can cater to diverse customer bases and expand their reach to a global audience.

- Sentiment Analysis: Based on their pre-training on a vast amount of text data, LLM-powered chatbots can analyze the sentiment of user statements, distinguishing between positive and negative expressions.

Suppose a user states, “This product doesn’t work properly.” The LLM-powered chatbot can detect the negative sentiment in the statement. It can then generate a response that acknowledges the user’s concern and offers assistance, such as, “I’m sorry to hear that. Can you please provide more details so I can help resolve the issue?”

This ability enables chatbots to gauge user emotions more accurately and provide a more personalized support experience.
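The flow in that example, detect the sentiment and then choose a fitting reply, can be sketched as follows. This is only a toy stand-in: the word lists and the `classify_sentiment` and `reply` functions are assumptions made for illustration, and an LLM would infer sentiment from context rather than from a fixed vocabulary.

```python
# Toy cue lists, assumed for illustration only; an LLM needs no such lists.
NEGATIVE_CUES = {"broken", "refund", "terrible", "slow"}
NEGATIVE_PHRASES = ("doesn't work", "does not work", "not working")
POSITIVE_CUES = {"great", "love", "thanks", "perfect", "excellent"}


def classify_sentiment(message: str) -> str:
    """Label a message as 'negative', 'positive', or 'neutral'."""
    # Normalize case and curly apostrophes so phrase matching is reliable.
    text = message.lower().replace("\u2019", "'")
    words = {w.strip(".,!?\"'") for w in text.split()}
    if any(phrase in text for phrase in NEGATIVE_PHRASES) or words & NEGATIVE_CUES:
        return "negative"
    if words & POSITIVE_CUES:
        return "positive"
    return "neutral"


def reply(message: str) -> str:
    """Choose a response template based on the detected sentiment."""
    sentiment = classify_sentiment(message)
    if sentiment == "negative":
        return ("I'm sorry to hear that. Can you please provide more "
                "details so I can help resolve the issue?")
    if sentiment == "positive":
        return "Glad to hear it! Is there anything else I can help with?"
    return "Thanks for reaching out. How can I help?"


print(reply("This product doesn't work properly"))
```

In a real deployment the classification step would be handled by the model itself, and the reply would be generated rather than picked from templates. The sketch only shows how detected sentiment can steer the tone of the response.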

Is Relying Solely on LLMs Enough to Drive Business Success?

While LLMs have undoubtedly revolutionized the way chatbots operate, there are still some challenges that need to be addressed for businesses to fully leverage their potential and achieve their desired objectives. These include:

- Inaccuracies: LLMs can be susceptible to inaccuracies because they rely on training data sourced from the internet, which may not always provide definitive or correct answers. As a result, chatbots may provide incorrect or misleading information.

- Bias: LLMs can unintentionally inherit biases present in their training data. For example, if training data drawn from sources such as newspapers exhibits gender or ethnicity bias, the chatbot may inadvertently generate discriminatory responses.

- Hallucination: LLMs can generate plausible-sounding but ultimately inaccurate or fictional information, a phenomenon known as “hallucination.” This can undermine the trustworthiness and reliability of chatbot interactions.

- Outdated data: LLMs struggle to keep up with the latest information because their training data may not capture the most up-to-date knowledge. As a result, chatbots may sometimes deliver responses that are outdated or irrelevant to user queries, thereby affecting the quality of the support experience.

The Role of SearchUnifyFRAG™ in Enhancing LLMs

To address the limitations of Large Language Models, cutting-edge frameworks like Federated Retrieval Augmented Generation (SearchUnifyFRAG™) have come into play.

SearchUnifyFRAG™ combines three key layers: Federation, Retrieval, and Augmented Generation, working in tandem to optimize chatbot performance and generate more contextually appropriate responses.

Federation Layer: The Federation layer focuses on enhancing user input by incorporating context retrieved from a comprehensive view of the enterprise knowledge base. Leveraging a 360-degree perspective, it facilitates the generation of responses that are not only accurate but also enriched with relevant factual content.

Retrieval Layer: The Retrieval layer plays a crucial role in accessing the most pertinent responses from a predefined set of knowledge with methods such as keyword matching, semantic similarity, and advanced retrieval algorithms. By retrieving the most relevant information, chatbots powered by FRAG can provide more accurate and contextually appropriate responses to user queries.

Augmented Generation Layer: The Augmented Generation layer emphasizes producing human-like responses or outputs based on the retrieved information or context. This is achieved using advanced techniques such as language modeling or neural networks.
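The three layers can be pictured with a small, generic retrieval-augmented sketch. This is not SearchUnify's implementation: the `SOURCES` data, the function names, and the keyword-overlap scoring are all assumptions made for illustration, and the final call to a language model is deliberately left out.

```python
import re

# Hypothetical content sources standing in for an enterprise knowledge base.
SOURCES = {
    "help_center": [
        "To reset your password, open Settings and choose Reset Password.",
        "Refunds are processed within five business days.",
    ],
    "community_forum": [
        "If the password reset email does not arrive, check your spam folder.",
    ],
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric terms."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def federate(sources: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Federation: merge all sources into one list of (source, passage) pairs."""
    return [(name, passage) for name, passages in sources.items() for passage in passages]


def retrieve(query: str, documents: list[tuple[str, str]], top_k: int = 2) -> list[tuple[str, str]]:
    """Retrieval: rank passages by how many query terms they share (keyword matching)."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(passage)), name, passage) for name, passage in documents]
    scored = [item for item in scored if item[0] > 0]  # drop passages with no overlap
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(name, passage) for _, name, passage in scored[:top_k]]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Augmented generation: ground the model by placing retrieved context in the prompt."""
    context = "\n".join(f"[{name}] {passage}" for name, passage in passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


question = "How do I reset my password?"
print(build_prompt(question, retrieve(question, federate(SOURCES))))
```

The resulting prompt would then be sent to an LLM, which generates the final answer from the supplied context rather than from its training data alone. A production system would likely replace keyword overlap with semantic similarity, as the Retrieval layer description suggests.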

Unleash the Next Level Chatbot Experience with SearchUnifyFRAG™!

When integrated with an LLM-powered chatbot, FRAG can help drive a more accurate and contextually relevant support experience. If you want to dive deeper, we invite you to join Vishal Sharma, CTO of SearchUnify, and Alan Pelz-Sharpe, Founder & Principal Analyst at Deep Analysis, for the upcoming webinar “Context Matters: Amplify Your Chatbot’s Potential with LLM-Powered Intelligence.” Together, they will talk about the rise of LLM-powered chatbots and how FRAG can help take their capabilities to the next level.