While technologies like generative AI create breathtaking visuals and remarkable virtual assistants, they also introduce new risks.

In fact, Geoffrey Hinton, one of the pioneers of deep learning and neural networks, recently resigned from his position at Google to focus on the dangers of generative AI.

This post will provide you with a bird’s-eye view of the risks associated with generative AI and ways to safeguard your business from them.

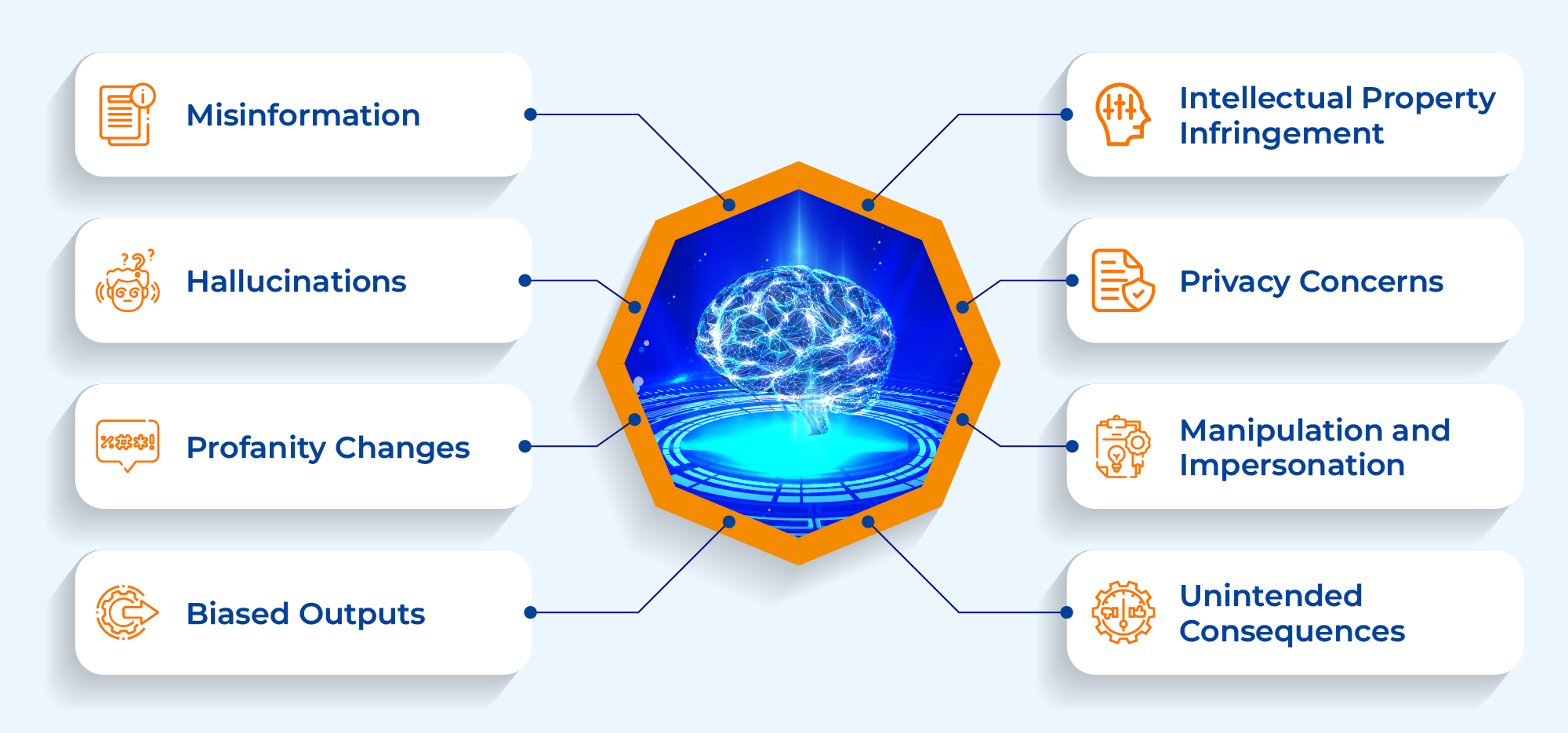

The Risks of Generative AI

- Misinformation: Generative AI models have the potential to generate misleading or false information, amplifying the spread of misinformation, fake news, and fabricated content.

- Hallucinations: Due to the complex nature of generative AI algorithms, there is a risk of hallucinations, where the model generates content that appears plausible but has no basis in its source data or in fact, leading to the dissemination of inaccurate results. Hallucinations range from fictional stories and images to entire scenarios that are convincingly realistic but not factual at all.

- Profanity: Generative AI models can unintentionally introduce offensive or inappropriate language or visuals into text or images, making it harder to maintain content integrity.

- Biased Outputs: If not properly trained and calibrated, generative AI models can reproduce biases present in their training data, perpetuating stereotypes and discrimination.

- Intellectual Property Infringement: Generative AI models can unintentionally produce content that infringes on intellectual property rights, for example by reproducing copyrighted material, which can lead to legal disputes.

- Privacy Concerns: Generative AI models trained on personal or sensitive data can leak that information in their outputs, putting individual privacy and security at risk.

- Manipulation and Impersonation: Malicious actors can exploit generative AI to manipulate content or impersonate individuals, for example with deepfake audio or video, enabling identity theft, fraud, and more serious crimes.

- Unintended Consequences: The complexity of generative AI models makes it challenging to fully predict or control their behavior. That means unintended consequences can emerge from their outputs, potentially impacting social dynamics and trust in information.

Neutralizing the Perils of Generative AI

Now that we know about the risks associated with generative AI, let’s take a closer look at some effective ways to overcome them.

1. Automated Expert Auditing

Automated expert auditing is a newer approach that leverages machine learning (ML) algorithms and other advanced technologies to identify potential issues with generative AI systems. It is particularly useful for large-scale or complex systems that are difficult for humans to review manually, and it typically involves training an ML model on a set of predetermined criteria.

To ensure the accuracy and effectiveness of automated expert auditing, it is important to have a diverse and representative training dataset.

This is where search analytics can help, with granular reports on what users are searching for, which queries are most popular, and more. By analyzing this data, you can identify potential issues with the generative AI system, such as biased search results or inaccuracies in the generated content.

Automated granular reports further enhance auditing by drilling into specific aspects of the system. For example, they can report on the performance of individual algorithms or models, pinpoint areas of the system that need improvement, and highlight potential sources of bias or inaccuracy.
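To make the idea of a granular search analytics report concrete, here is a minimal Python sketch. It assumes a hypothetical log format (a `SearchEvent` with `query`, `result_count`, and `clicked` fields) and illustrative thresholds (`top_n`, `min_ctr`); it is not SearchUnify's search analytics API. It lists the most popular queries and flags queries that returned no results or rarely led to a click, which are natural starting points for an audit.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass
class SearchEvent:
    """One logged search (hypothetical log schema for illustration)."""
    query: str
    result_count: int
    clicked: bool


def search_report(events, top_n=3, min_ctr=0.2):
    """Return the most popular queries and the queries that look worth auditing."""
    # Normalize case and whitespace so near-identical queries are counted together.
    normalized = [e.query.strip().lower() for e in events]
    popular = Counter(normalized).most_common(top_n)

    # Per-query totals: searches, searches with zero results, searches with a click.
    stats = defaultdict(lambda: {"searches": 0, "no_results": 0, "clicks": 0})
    for query, event in zip(normalized, events):
        s = stats[query]
        s["searches"] += 1
        s["no_results"] += event.result_count == 0
        s["clicks"] += event.clicked

    # Flag queries that ever returned nothing or whose click-through rate is below min_ctr.
    flagged = sorted(
        q for q, s in stats.items()
        if s["no_results"] > 0 or s["clicks"] / s["searches"] < min_ctr
    )
    return {"popular": popular, "flagged": flagged}


events = [
    SearchEvent("reset password", 12, True),
    SearchEvent("Reset Password ", 12, True),
    SearchEvent("reset password", 12, False),
    SearchEvent("refund policy", 0, False),
    SearchEvent("api limits", 5, False),
]
report = search_report(events)
print(report["popular"])  # [('reset password', 3), ('refund policy', 1), ('api limits', 1)]
print(report["flagged"])  # ['api limits', 'refund policy']
```

Zero-result and low click-through queries are only signals; a human reviewer still decides whether they point to bias, gaps in the content, or inaccurate generated answers.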

2. Content Standard Checklist

One of the major challenges in content creation is ensuring that content is unique, relevant, and free from profanity and hallucinations. This is where the Content Standard Checklist comes in handy.

The checklist uses an advanced ML algorithm that assesses article quality against factors such as relevance, accuracy, completeness, tone, and sentiment, and assigns each piece a score. An article with a low score can be flagged for review by a human moderator, who can then verify and improve it. The assessment is based on four parameters:

- Uniqueness

- Title Relevancy

- Link Validity

- Metadata
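The four parameters above can be illustrated with a simple scorer. This is a minimal sketch, not the Content Standard Checklist itself: plain rule-based heuristics stand in for the ML algorithm, and the heuristics, the required metadata fields (`REQUIRED_METADATA`), and the review threshold (`REVIEW_THRESHOLD`) are all hypothetical. Uniqueness is measured as word-shingle overlap with existing articles, title relevancy as the share of title words found in the body, link validity as URL syntax only (no network requests), and metadata as the share of required fields filled in.

```python
import re
from urllib.parse import urlparse

# Hypothetical settings for illustration; a real checklist would define its own.
REQUIRED_METADATA = ("author", "description", "tags")
REVIEW_THRESHOLD = 0.7


def words(text):
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text, n=3):
    """Set of consecutive n-word sequences, used to measure textual overlap."""
    w = words(text)
    return {tuple(w[i:i + n]) for i in range(len(w) - n + 1)}


def uniqueness(body, corpus):
    """1 minus the highest Jaccard similarity between the body and any existing article."""
    mine = shingles(body)
    if not mine:
        return 0.0
    highest = 0.0
    for other in corpus:
        theirs = shingles(other)
        highest = max(highest, len(mine & theirs) / len(mine | theirs))
    return 1.0 - highest


def title_relevancy(title, body):
    """Share of title words longer than three letters that also appear in the body."""
    title_words = {w for w in words(title) if len(w) > 3}
    if not title_words:
        return 0.0
    return len(title_words & set(words(body))) / len(title_words)


def is_well_formed_url(url):
    """Syntax check only: http(s) scheme plus a host. No network request is made."""
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def link_validity(links):
    if not links:
        return 1.0
    return sum(is_well_formed_url(u) for u in links) / len(links)


def metadata_completeness(meta):
    return sum(1 for field in REQUIRED_METADATA if meta.get(field)) / len(REQUIRED_METADATA)


def check_article(article, corpus):
    """Average the four parameter scores and flag the article for human review if low."""
    scores = {
        "uniqueness": uniqueness(article["body"], corpus),
        "title_relevancy": title_relevancy(article["title"], article["body"]),
        "link_validity": link_validity(article["links"]),
        "metadata": metadata_completeness(article["meta"]),
    }
    overall = sum(scores.values()) / len(scores)
    return overall, overall < REVIEW_THRESHOLD, scores


corpus = ["How to reset your password in the admin console step by step"]
article = {
    "title": "Resetting a Password",
    "body": "To reset your password, open the admin console and choose Security.",
    "links": ["https://example.com/security", "htp:/broken"],
    "meta": {"author": "Support Team", "description": "", "tags": ["account"]},
}
overall, needs_review, scores = check_article(article, corpus)
print(needs_review)  # True
```

Because the overall score is an average, weak parameters (here, a title word missing from the body, a malformed link, and an empty description) pull an otherwise original article below the threshold, which is the point where a human moderator takes over.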

Want to know more about the Content Standard Checklist? Give this post a read.

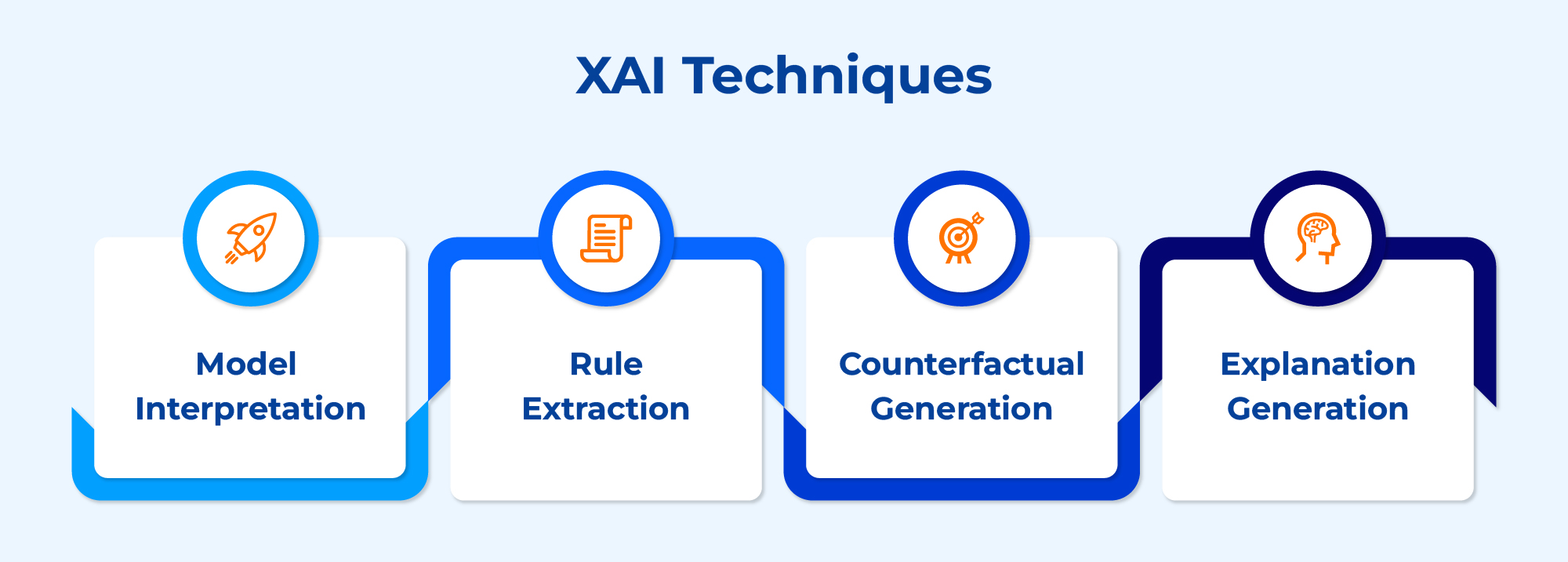

3. Explainable AI (XAI)

One of the main challenges with generative AI systems is that they often operate as black boxes. This lack of transparency makes it difficult to identify and address issues with the system, which can lead to unintended consequences and, in some cases, even harm.

To address this challenge, XAI techniques aim to provide humans with a clear and interpretable understanding of how an AI system works. Some of the main techniques used in XAI include:

- Model Interpretation: This involves developing methods to understand how a model makes predictions, including which features are most important, how inputs are weighed, and how the model’s parameters are tuned.

- Rule Extraction: It focuses on distilling a set of interpretable rules or decision trees that capture the underlying logic of the AI system. These rules provide transparent explanations for the system’s behavior, making it easier for humans to understand and validate the model’s outputs.

- Counterfactual Generation: This technique generates alternative scenarios, typically minimally changed inputs, that would lead to different outcomes. By exploring these scenarios, stakeholders can better understand how the system works and identify potential issues.

- Explanation Generation: This is all about generating explanations of a model’s behavior in natural language. These explanations can be used to communicate how the system works to non-technical stakeholders, including end-users, regulators, and policymakers.
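Counterfactual generation can be illustrated with a minimal sketch. It assumes a hypothetical linear model that decides whether a generated answer is published without human review; the feature names, weights (`WEIGHTS`), and bias (`BIAS`) are invented for illustration. The search changes one feature at a time and reports the smallest integer change that flips the decision, which is a deliberately simplified form of the technique.

```python
# Hypothetical toy model: decides whether a generated answer is published
# without human review. Feature names, weights, and bias are illustrative only.
WEIGHTS = {"cited_sources": 1.0, "unsupported_claims": -1.5, "toxic_terms": -3.0}
BIAS = 0.5


def publish(features):
    """Linear decision rule: publish when the weighted score is non-negative."""
    score = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return score >= 0.0


def counterfactuals(features, max_change=10):
    """For each feature alone, find the smallest integer change that flips the decision.

    Only one feature is changed at a time, and counts are kept non-negative.
    Returns {feature: new_value} for every feature where a flip was found.
    """
    original = publish(features)
    found = {}
    for name, value in features.items():
        for delta in range(1, max_change + 1):
            # Try the same-sized change in both directions before widening the search.
            for new_value in (value + delta, value - delta):
                if new_value < 0:
                    continue
                candidate = {**features, name: new_value}
                if publish(candidate) != original:
                    found[name] = new_value
                    break
            if name in found:
                break
    return found


answer = {"cited_sources": 1, "unsupported_claims": 2, "toxic_terms": 0}
print(publish(answer))          # False
print(counterfactuals(answer))  # {'cited_sources': 3, 'unsupported_claims': 1}
```

Each result reads as an explanation a stakeholder can check: this answer would have been published with three cited sources instead of one, or with one unsupported claim instead of two. Dedicated counterfactual methods typically change several features at once and add constraints that keep the altered input plausible.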

For explainable AI, SearchUnify offers ML Workbench, a tool that allows users to test the impact of machine learning algorithms on their ecosystem. It is designed to make the ML algorithms in use transparent, explainable, and easy for users to understand. This helps users see what goes on under the hood, understand the system's behavior, and identify potential issues, ultimately leading to more effective and responsible use of generative AI.

Final Verdict

Despite the risks associated with generative AI, enterprises should not hold back from exploring the technology. Putting guardrails in place in advance can help organizations leverage generative AI accurately, easily, and securely to elevate customer and employee experiences.

SearchUnify helps make generative AI a seamless part of your workflows. How?

Request a demo now to find out!