The movies “Her” and “Ex Machina” captivated us with their portrayals of artificial intelligence (AI) systems that possess a profound understanding of human language, engage in interactive conversations, and even form emotional connections. These films sparked discussions and raised thought-provoking questions about AI. Today, AI is no longer confined to the screen: Large Language Models (LLMs) are gaining popularity and reshaping various industries, including enterprise search.

Now a new, burning question is making the rounds: will LLMs render traditional enterprise search systems obsolete and spark a technological revolution in the way we search for information?

In this blog post, we explore LLMs and their influence on enterprise search. The stage is set and all eyes are on LLMs, so let’s delve into the exciting possibilities that lie ahead.

But First Things First: What Are LLMs?

Large Language Models (LLMs) are powerful machine learning (ML) models that use deep learning to process and generate natural language. They are trained on vast amounts of text to learn the patterns of a language, including the relationships between words and entities. LLMs excel at a variety of language-related tasks, such as translation, sentiment analysis, and chatbot interactions. They can grasp intricate textual information, identify entities, discern the relationships between them, and generate coherent, grammatically correct text.

Fascinating, isn’t it?

However, despite the remarkable advances LLMs bring to language comprehension and conversational search, there are several reasons why they cannot replace traditional enterprise search systems on their own. Curious what those reasons are? Read on.

Why LLMs Fall Short of Enterprise Search

1. Lack of Domain-Specific Knowledge

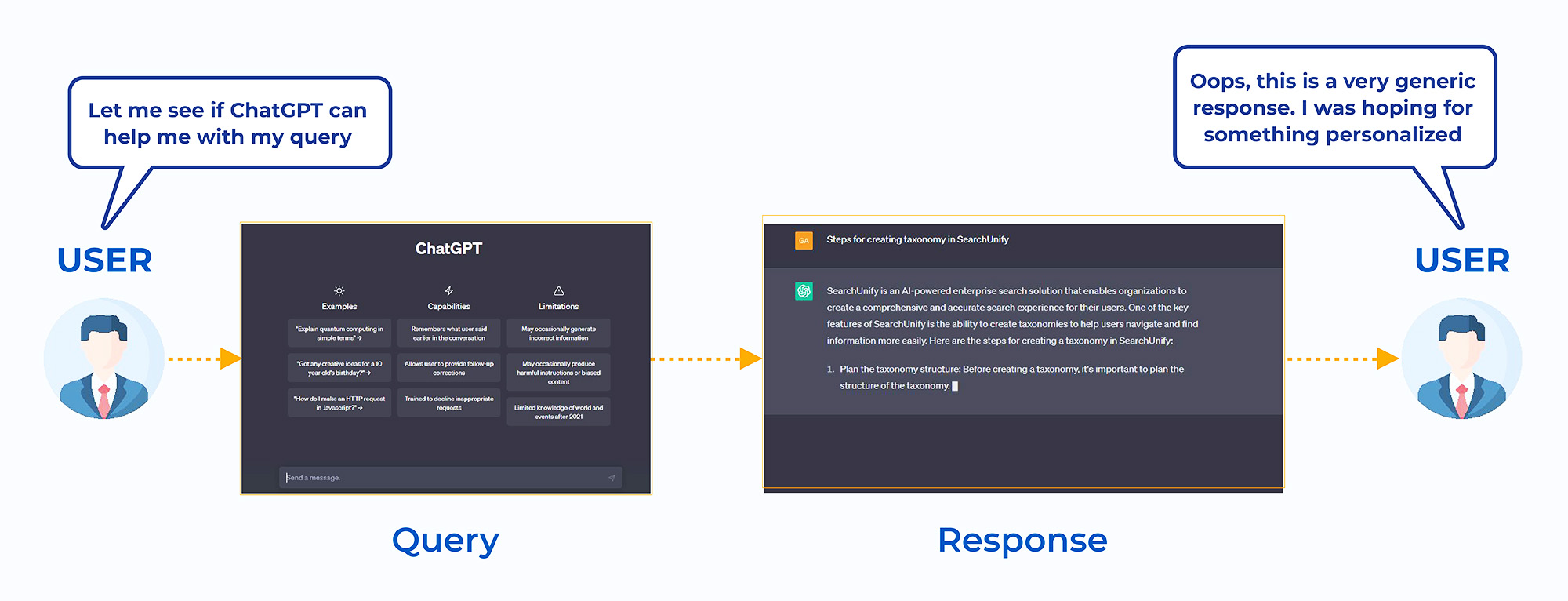

LLMs are trained on large, general-purpose datasets that typically do not include the proprietary, domain-specific information of a particular organization. Enterprise search systems, on the other hand, index an organization’s own content and can be customized and tuned to its domain, improving the retrieval of industry-specific information.

2. Limited Contextual Understanding

LLMs are good at following the context within a given conversation or query. However, they often struggle to interpret complex or ambiguous queries that require deep domain knowledge or organizational context. Enterprise search systems, with their structured search capabilities and metadata filtering, can provide more precise results in such scenarios.

3. Security and Privacy Concerns

LLMs can memorize parts of the data they are trained on and may inadvertently expose sensitive or confidential information; on their own, they also have no notion of which users are permitted to see which documents. Organizations should therefore carefully consider the security and privacy implications of using LLMs in their search systems. Traditional enterprise search systems, by contrast, often have well-established security measures and access controls in place to protect sensitive data.
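To make the access-control point concrete, here is a minimal sketch of permission-aware search. It assumes a hypothetical in-memory document store in which each document carries a set of allowed groups; results are filtered by the user’s groups before anything is returned, so an LLM placed downstream would only ever see content the user is entitled to read. The `Document` type, the `search_with_acl` function, and the group names are illustrative assumptions, not any particular product’s API.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    text: str
    # Hypothetical ACL field: groups allowed to read this document.
    allowed_groups: set[str] = field(default_factory=set)


def search_with_acl(docs: list[Document], query: str, user_groups: set[str]) -> list[str]:
    """Return IDs of documents that match the query AND that the user may read.

    Matching is a naive case-insensitive substring check; a real search
    engine would use an index and relevance ranking instead.
    """
    q = query.lower()
    results = []
    for doc in docs:
        # Enforce access control first: skip documents the user cannot see.
        if not (doc.allowed_groups & user_groups):
            continue
        if q in doc.text.lower():
            results.append(doc.doc_id)
    return results


corpus = [
    Document("hr-001", "Salary bands for 2024", {"hr"}),
    Document("kb-042", "How to reset your VPN password", {"all-staff"}),
    Document("kb-043", "VPN setup guide for contractors", {"all-staff", "contractors"}),
]

print(search_with_acl(corpus, "vpn", {"contractors"}))   # ['kb-043']
print(search_with_acl(corpus, "salary", {"all-staff"}))  # []
```

Filtering before retrieval, rather than asking the model to withhold restricted content, keeps the security guarantee in the search layer, where it can be audited.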

4. Customizations and Tuning Requirements

Enterprise search systems allow organizations to customize and tune the search experience to meet their specific requirements. They can define search rules, adjust relevance ranking, and incorporate domain-specific knowledge for better results. LLMs, while powerful, may require additional effort, such as fine-tuning on domain data, before they can be customized to the same degree.

5. Information Retrieval and Indexing Capabilities

Traditional enterprise search systems have mature indexing and retrieval mechanisms that efficiently handle large volumes of data. They can index structured and unstructured content, perform faceted searches, and support complex queries. LLMs, although proficient in language understanding, are not designed to index content and may not match the efficiency and scalability of a search engine in information retrieval and indexing tasks.
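For readers curious about what indexing and faceted search look like in practice, below is a minimal sketch of an inverted index, the core data structure behind keyword search, with a simple facet filter. It is a toy illustration under simplifying assumptions (whitespace tokenization, exact-match facet values, and AND semantics for multi-term queries); `InvertedIndex` and its methods are hypothetical names, and production engines add ranking, stemming, and much more.

```python
from collections import defaultdict


class InvertedIndex:
    """Toy inverted index: maps each term to the set of document IDs containing it."""

    def __init__(self) -> None:
        self.postings: dict[str, set[str]] = defaultdict(set)
        self.facets: dict[str, dict[str, str]] = {}  # doc_id -> {facet_name: value}

    def add(self, doc_id: str, text: str, facets: dict[str, str]) -> None:
        # Naive tokenization: lowercase and split on whitespace.
        for term in text.lower().split():
            self.postings[term].add(doc_id)
        self.facets[doc_id] = facets

    def search(self, query: str, **facet_filters: str) -> list[str]:
        terms = query.lower().split()
        if not terms:
            return []
        # AND semantics: a document must contain every query term.
        hits = set.intersection(*(self.postings.get(t, set()) for t in terms))
        # Faceted filtering: keep only documents whose facet values match exactly.
        hits = {
            d for d in hits
            if all(self.facets[d].get(k) == v for k, v in facet_filters.items())
        }
        return sorted(hits)


index = InvertedIndex()
index.add("d1", "quarterly sales report", {"department": "sales", "type": "pdf"})
index.add("d2", "sales onboarding guide", {"department": "hr", "type": "doc"})
index.add("d3", "annual sales report", {"department": "sales", "type": "doc"})

print(index.search("sales report"))              # ['d1', 'd3']
print(index.search("sales report", type="doc"))  # ['d3']
```

Because lookups go straight from term to matching documents, the cost of a query depends on the size of the relevant posting lists rather than on the size of the whole corpus, which is what lets search engines scale to large volumes of content.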

Despite these limitations, LLMs still stand tall. In fact, their emergence heralds a transformative future for enterprise search. Let’s dive deeper.

Breaking Barriers: How LLMs Are Propelling Enterprise Search into the Future

Looking ahead, LLMs hold promising prospects for enterprise search. Key areas where they can enhance the search experience include:

1. Offers an Intuitive Interface

LLMs have the potential to transform the search experience with conversational interactions and intuitive user interfaces. Users can ask questions in natural language and refine them through follow-up conversation instead of crafting keyword queries, making the search process faster and more efficient.

2. Solves Application Splintering and Digital Friction

With the proliferation of various enterprise applications, employees often struggle with information fragmentation and digital friction. LLMs can bridge these gaps by offering a search experience across multiple applications, reducing the need to switch between different systems and improving overall productivity.

3. Delivers More Relevant Results

LLMs can improve search result relevance by interpreting the context and intent behind a user’s query rather than matching keywords alone. This allows for more accurate retrieval of information and reduces the effort required to refine search queries, leading to increased efficiency and better user satisfaction.

4. Provides Direct Answers

Using LLMs to strengthen question answering within enterprise search can greatly benefit users. By understanding the context and semantics of a question, LLMs can generate precise, helpful answers instead of a list of links, streamlining the search process and giving users quick access to relevant information.

5. Makes Sense of Unstructured Data

Unstructured data, such as documents, emails, and text files, often poses challenges for traditional search systems. LLMs excel at processing and understanding unstructured data, enabling more accurate and comprehensive search results across these data sets. This capability is particularly valuable in industries such as legal, healthcare, and research, where unstructured data is abundant.

The Dynamic Duo: LLMs and Enterprise Search Working Hand in Hand

In conclusion, while LLMs may not completely replace traditional enterprise search systems, they offer significant enhancements that can revolutionize the search experience. By combining the strengths of both approaches and carefully addressing the limitations, organizations can create powerful and comprehensive search solutions.

Organizations that embrace and leverage LLMs within their enterprise search systems will be well positioned to stay ahead in an era where quick and accurate access to information is critical for success.

This is why SearchUnify’s LLM integration has been the talk of the tech world. Want to know more about it and explore SearchUnify’s LLM-infused products, SUVA and Knowbler?

Feel free to request a demo. Happy Discovering!