In a world increasingly driven by data and automation, Large Language Models (LLMs) have emerged as powerful tools with the potential to transform businesses across various sectors. OpenAI’s ChatGPT and Anthropic’s Claude stand as prominent examples, capable of generating human-like text and reshaping natural language processing (NLP).

But there is another side to the story. While LLMs offer remarkable capabilities, implementing them is not straightforward. Challenges spanning data security, ethical considerations, and ongoing maintenance and updates, among others, cast a significant shadow over the path to harnessing their full potential.

To guide organizations through this intricate landscape, we introduce the 5C Consideration Framework, an invaluable compass for navigating the complexities of LLM usage across multiple domains. Moreover, we unveil an innovative solution that not only addresses these challenges but also propels businesses toward unlocking the boundless possibilities that LLMs offer.

Let’s dive into the details!

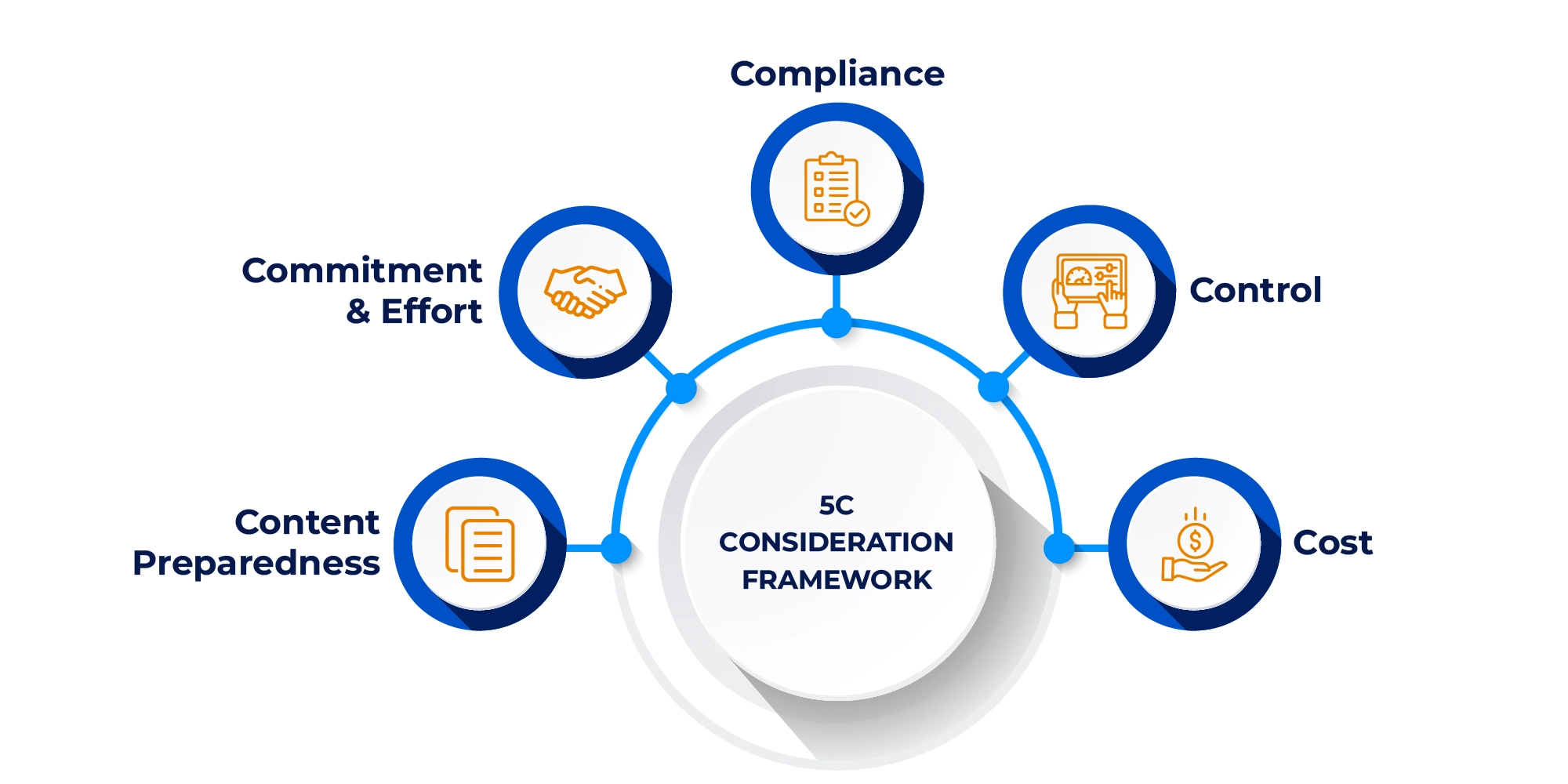

The Robust 5C Framework – Factors to Consider When Integrating LLMs


Compliance

LLMs have the potential to generate low-quality, biased, or deceptive content, raising concerns about misinformation, deepfakes, and malicious use. This makes compliance a critical aspect to consider when dealing with these models. Organizations must ensure that they adhere to data protection regulations and obtain the necessary approvals for data usage before integrating these models into their workflows.

For example, a healthcare company looking to implement LLMs for medical document summarization should comply with HIPAA regulations to protect patient data and ensure the ethical use of LLMs.


Control

When leveraging LLMs for business operations, it is crucial to consider the future direction of LLM service providers. Evaluate their roadmap and vision, as it can have a substantial impact on your organization’s long-term strategy.

Additionally, assess the level of downstream flexibility an LLM offers and whether it supports edge deployment, that is, how efficiently the model can run in decentralized or resource-constrained environments.

Lastly, determine the extent to which you can customize the model to align with your unique business requirements. If the available customization falls short, fine-tuning might become necessary, which can be a costly and resource-intensive process.


Cost

Integrating LLMs can be a significant investment. Consider the expenses associated with the software, hardware, and subject matter expertise required for implementation. It is also vital to calculate the total cost of ownership (TCO) and amortize it per inference to accurately gauge the long-term financial implications. That’s not all: upfront training and exploration costs are also integral to the initial investment.

Such a cost-focused approach ensures businesses make informed decisions, optimizing the investment in LLM integration for sustainable benefits.
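To make the per-inference amortization concrete, here is a minimal Python sketch. The cost categories mirror the ones listed above. However, the `LLMCostInputs` fields, the split between one-time and annual costs, and every figure in the example are hypothetical assumptions for illustration, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class LLMCostInputs:
    """Hypothetical cost inputs for an LLM deployment, all in one currency."""
    software: float           # assumed one-time: licences, tooling
    hardware: float           # assumed one-time: GPUs, servers, prepaid capacity
    expertise: float          # assumed one-time: subject matter experts
    upfront_training: float   # one-time: training, fine-tuning, exploration
    annual_operating: float   # recurring: hosting, maintenance, updates per year
    years: int                # amortization horizon in years
    inferences_per_year: int  # expected request volume


def total_cost_of_ownership(c: LLMCostInputs) -> float:
    """One-time outlays plus recurring costs over the whole horizon."""
    one_time = c.software + c.hardware + c.expertise + c.upfront_training
    return one_time + c.annual_operating * c.years


def cost_per_inference(c: LLMCostInputs) -> float:
    """Spread the TCO evenly across every inference served in the horizon."""
    total_inferences = c.inferences_per_year * c.years
    if total_inferences <= 0:
        raise ValueError("expected a positive number of inferences")
    return total_cost_of_ownership(c) / total_inferences


# Made-up figures purely for illustration.
inputs = LLMCostInputs(
    software=50_000,
    hardware=200_000,
    expertise=150_000,
    upfront_training=100_000,
    annual_operating=120_000,
    years=3,
    inferences_per_year=2_000_000,
)
tco = total_cost_of_ownership(inputs)
unit = cost_per_inference(inputs)
print(f"TCO: {tco:,.0f}, per inference: {unit:.4f}")  # TCO: 860,000, per inference: 0.1433
```

With these made-up figures, the three-year TCO is 860,000, and each of the 6,000,000 inferences carries roughly 0.14 of it. Plugging in your own estimates quickly shows how inference volume shifts the unit economics.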


Content Preparedness

The effectiveness of LLMs relies mainly on their ability to generate domain-specific content. Businesses must decide between fine-tuning, which updates the model’s weights on task-specific data, and few-shot learning, which supplies a handful of worked examples in the prompt. Choose the approach that best matches your level of task-specific data preparedness so that the model can adapt smoothly to new tasks or domains.

Furthermore, make sure the LLM is trained on the industry-specific jargon, technical terminology, and specialized vocabulary that may be integral to your operations. For instance, a legal firm implementing LLMs for contract analysis should ensure that the model understands legal jargon and terminology to produce accurate results.
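With the few-shot approach, adaptation happens in the prompt rather than in the model’s weights. The sketch below assembles such a prompt for a contract-analysis task like the legal example above. The function name `build_few_shot_prompt`, the `Clause:`/`Label:` format, and the example clauses and labels are all hypothetical. Sending the prompt to a model is left out because that call depends on your provider.

```python
from typing import List, Sequence, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, worked input/label pairs, and the new input.

    The prompt ends with "Label:" so the model continues the pattern.
    """
    parts: List[str] = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Clause: {text}")
        parts.append(f"Label: {label}")
        parts.append("")  # blank line between examples
    parts.append(f"Clause: {query}")
    parts.append("Label:")
    return "\n".join(parts)


# Hypothetical examples for a contract-analysis task; labels are illustrative.
EXAMPLES = [
    ("Either party may terminate this Agreement upon thirty days' written notice.",
     "termination"),
    ("The Supplier shall indemnify the Customer against all third-party claims.",
     "indemnification"),
]

prompt = build_few_shot_prompt(
    "Classify each contract clause by its legal category.",
    EXAMPLES,
    "Neither party shall be liable for delays caused by force majeure events.",
)
print(prompt)
```

Domain vocabulary shows up here through the examples themselves. Choosing representative clauses that use your firm’s terminology is what makes the few-shot route work without retraining.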


Commitment and Effort

Integrating LLMs requires careful consideration of the commitment and effort involved. This includes assessing the need for domain expertise in tasks such as training and fine-tuning, the dedication required to host open-source LLMs, and the effort needed to scale integrations across various applications and industry-specific use cases. By understanding and planning for these aspects, organizations can take a focused and effective approach to LLM integration.

Enter SearchUnifyFRAG™ – The Innovative Solution for Effortless & Efficient LLM Optimization

Let’s face it: organizations worldwide are in a competitive race to adopt LLMs to streamline their operations. However, addressing the inherent limitations of LLMs requires a comprehensive solution.

While common approaches like RAG (Retrieval-Augmented Generation) help these models build context and excel at domain-specific tasks, challenges such as limited contextual understanding, dataset bias, and inefficient information retrieval still stand in the way.
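To illustrate what RAG does, the following Python sketch retrieves the passages most relevant to a question and prepends them to the prompt so that the model answers from that context. It is a deliberately simplified, generic illustration and not SearchUnify’s implementation. Retrieval here is plain keyword overlap, whereas production systems typically use vector embeddings or a ranking function such as BM25. The knowledge-base entries and helper names are hypothetical, and the actual model call is omitted.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase alphanumeric tokens (no stemming, so 'resets' != 'reset')."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents with the largest query-term overlap.

    sorted() is stable, so documents with equal scores keep their order.
    """
    query_terms = Counter(tokenize(query))

    def score(doc: str) -> int:
        doc_terms = Counter(tokenize(doc))
        return sum(min(count, doc_terms[term]) for term, count in query_terms.items())

    ranked = sorted(documents, key=score, reverse=True)
    return [doc for doc in ranked[:k] if score(doc) > 0]


def build_augmented_prompt(query: str, passages: List[str]) -> str:
    """Prepend numbered retrieved passages so the model answers from them."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


# Hypothetical knowledge-base entries.
KNOWLEDGE_BASE = [
    "To reset your password, open Settings and choose Security.",
    "Invoices are emailed on the first business day of each month.",
    "Password resets expire after 24 hours.",
]

question = "How do I reset my password?"
passages = retrieve(question, KNOWLEDGE_BASE)
prompt = build_augmented_prompt(question, passages)
print(prompt)
```

Notice that the crude tokenizer treats "resets" and "reset" as different words. This is a small instance of the inefficient information retrieval mentioned above: the better the retrieval step, the better the context the model receives.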

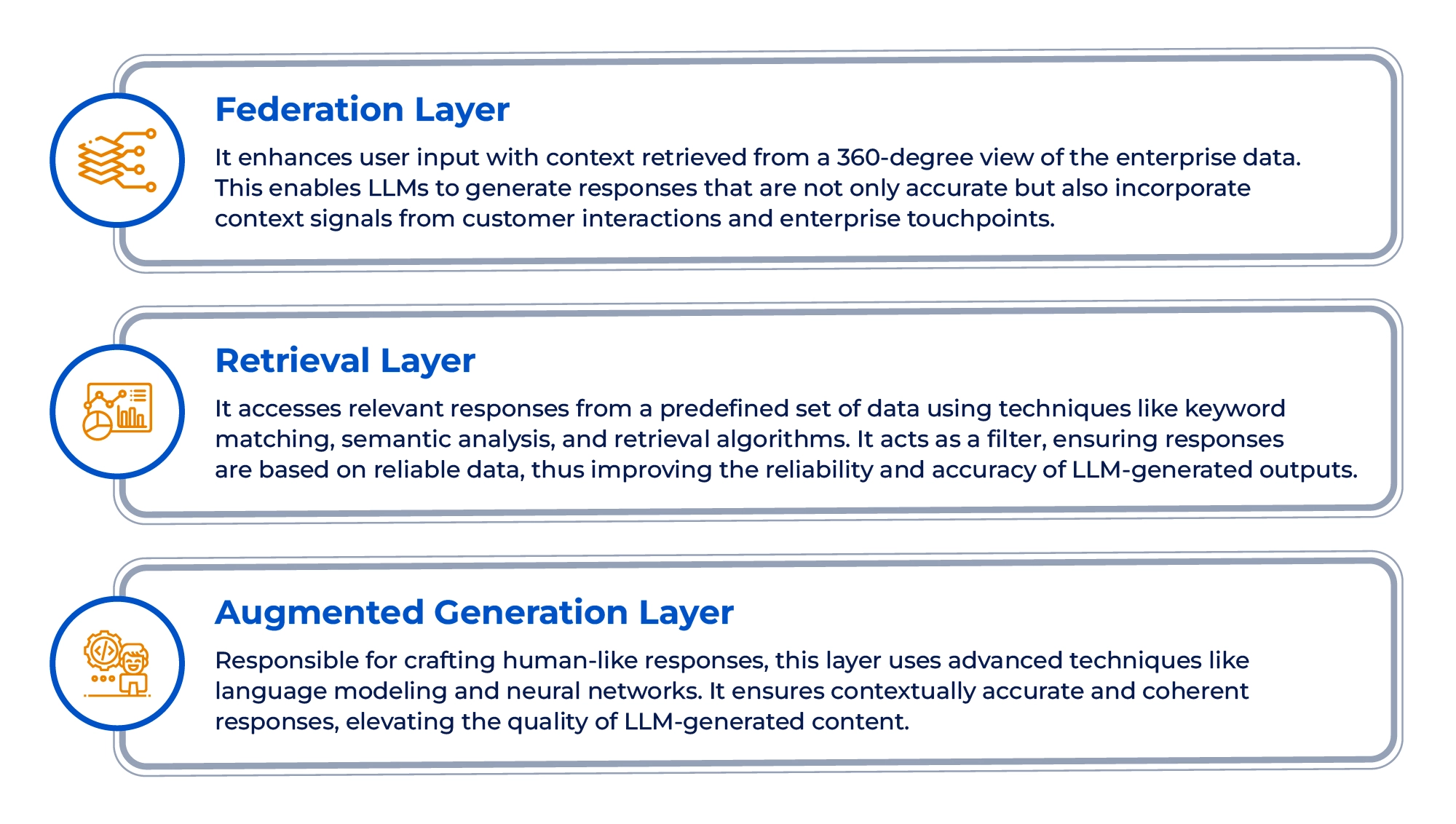

This is where the next-generation SearchUnifyFRAG™ comes into play! It is a groundbreaking framework that complements the 5C Consideration Framework and brings a new dimension to LLM integration. How, you may ask?

It comprises three integral layers – Federation, Retrieval, and Augmented Generation – to elevate LLMs to new heights of context awareness, personalization, and efficiency. Refer to the image below for more details.
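The three layers can be pictured as a pipeline. The sketch below is a generic illustration under our own assumptions, not a description of how SearchUnifyFRAG™ is built. Federation is modeled as merging records from several hypothetical source connectors, with source attribution and exact-duplicate removal. Retrieval is modeled as simple keyword overlap, and augmented generation as assembling the prompt that would be sent to an LLM.

```python
import re
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical connector type: a zero-argument function returning text records.
Connector = Callable[[], List[str]]
Record = Tuple[str, str]  # (source name, text)


def federate(connectors: Dict[str, Connector]) -> List[Record]:
    """Federation: pool records from every source, tagging each with its origin.

    Exact duplicates (ignoring case and surrounding whitespace) are kept once,
    attributed to the first source that returned them.
    """
    seen: Set[str] = set()
    pool: List[Record] = []
    for source, fetch in connectors.items():
        for text in fetch():
            key = text.strip().lower()
            if key not in seen:
                seen.add(key)
                pool.append((source, text))
    return pool


def _terms(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, pool: List[Record], k: int = 3) -> List[Record]:
    """Retrieval: rank pooled records by how many distinct query terms they share."""
    query_terms = _terms(query)

    def overlap(record: Record) -> int:
        return len(query_terms & _terms(record[1]))

    ranked = sorted(pool, key=overlap, reverse=True)
    return [r for r in ranked[:k] if overlap(r) > 0]


def augment(query: str, hits: List[Record]) -> str:
    """Augmented generation: build a source-attributed prompt for an LLM."""
    context = "\n".join(f"- ({source}) {text}" for source, text in hits)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical sources standing in for real systems.
connectors: Dict[str, Connector] = {
    "knowledge_base": lambda: ["Reset passwords from the Settings page."],
    "support_tickets": lambda: [
        "Customer could not reset password after 24 hours.",
        "Reset passwords from the Settings page.",
    ],
    "community": lambda: ["Tip: export reports as CSV."],
}

query = "How do I reset my password?"
pool = federate(connectors)
hits = retrieve(query, pool)
print(augment(query, hits))
```

Keeping the source name on every record lets the final prompt, and any answer built from it, point back to where each piece of context came from.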

To learn more about SearchUnifyFRAG™ and how it works, read here.

Elevating Business Outcomes with Successful LLM Implementation – Your Journey Begins Now!

As LLMs continue to advance and become more powerful tools for businesses, concerns about ethical implications and potential biases also grow. Fortunately, with SearchUnifyFRAG™, you can seamlessly harness the potential of these models while adhering to the principles of the 5C Consideration Framework.

If you are interested in witnessing the transformative power of SearchUnifyFRAG™ firsthand, request a live demo now.