In our previous blog post, “Prompt Engineering: Maximizing the Potential of Large Language Models,” we discovered how prompt engineering has gained significant attention as Large Language Models (LLMs) continue advancing the natural language processing (NLP) field. After all, it provides specific instructions or cues that effectively guide LLMs to produce coherent and contextual responses.

Two popular techniques, zero-shot prompting and few-shot prompting, have been making waves for their ability to achieve remarkable results with little or no task-specific data.

Let’s delve deeper into these techniques, explore their advantages, and determine which one is best suited for enhancing the performance of language models in your business.

What is Zero-Shot Prompting?

Zero-shot prompting is a technique that allows a model to make predictions on unseen data without requiring additional training.

In prompt engineering, this technique empowers LLMs to perform tasks they haven’t been explicitly trained for. It works by giving the model a prompt that describes the task, along with the set of target labels where the task calls for them, but no worked examples. The model then draws on its pre-trained knowledge and reasoning abilities to generate a response relevant to the prompt.

Consider the following example:

In this prompt, the language model demonstrates its ability to generate a reasonable German translation, despite being given no English-to-German translation examples. By leveraging its general understanding of English and German structure, vocabulary, and grammar, the model produces a translation that captures the intended meaning of the original English phrase.
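A zero-shot translation prompt of this kind can be sketched in a few lines of Python. This is only an illustration: the helper name `build_zero_shot_prompt`, the prompt layout, and the sample sentence are assumptions made here, not taken from any particular library or from the original example. The point is that the prompt carries an instruction and the input, and nothing else.

```python
def build_zero_shot_prompt(instruction: str, input_text: str) -> str:
    """Build a prompt with an instruction and the input only (no worked examples)."""
    # Hypothetical layout; real applications may format prompts differently.
    return f"{instruction.strip()}\n\nText: {input_text.strip()}\nAnswer:"


# Illustrative sentence chosen for this sketch.
prompt = build_zero_shot_prompt(
    "Translate the following English text to German.",
    "Good morning, how can I help you?",
)
print(prompt)
```

The resulting string would then be sent to whichever LLM you use; that call is left out because it depends on your provider.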

Zero-shot prompting encompasses two ways for LLMs to generate responses:

1. Embedding-Based Approach

It focuses on the underlying meaning and semantic connections between the prompt and the generated text. By leveraging the semantic similarity between the prompt and the desired output, the model generates coherent and contextually relevant responses.
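One way to picture the embedding-based idea is zero-shot classification by similarity: embed the input, embed a short description of each candidate label, and pick the closest label. The sketch below is a toy under loud assumptions. The `embed` function is a word-count stand-in for a real embedding model, and the label descriptions are invented for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for a learned embedding model: lowercase word counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def zero_shot_classify(text: str, label_descriptions: dict) -> tuple:
    """Return the label whose description is most similar to the text, plus all scores."""
    text_vec = embed(text)
    scores = {
        label: cosine(text_vec, embed(description))
        for label, description in label_descriptions.items()
    }
    return max(scores, key=scores.get), scores


# Hypothetical labels and descriptions for a support inbox.
labels = {
    "billing": "invoice payment charge refund billing",
    "shipping": "delivery package shipping tracking late",
}
best_label, scores = zero_shot_classify("my package delivery is late", labels)
print(best_label, scores)
```

With a real embedding model in place of `embed`, the same structure would match on meaning rather than on shared words.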

2. Generative Model-Based Approach

In this approach, the language model generates text by conditioning on both the prompt and a description of the desired output. Explicit instructions and constraints in the prompt steer the model toward text that fits the task, which allows for more control and precision in the generated output.

Continuing our exploration, let’s now turn our attention to few-shot prompting and its significance in prompt engineering.

What is Few-Shot Prompting?

Few-shot prompting involves giving an LLM a small amount of task-specific data, typically a handful of examples placed directly in the prompt, to improve its performance on that task.

Few-shot prompting allows a language model to adapt and generalize to a specific task by providing it with only a few labeled examples or demonstrations.

Suppose you have a large dataset of customer reviews for various products, and you want a language model to classify reviews as positive or negative. Instead of labeling thousands of reviews, you can use few-shot prompting to guide the model with just a handful of labeled examples.

For instance, you provide the following labeled examples to the model:

Review: “I loved the new smartphone. The camera quality is amazing!”

Label: Positive

Review: “The customer service was terrible. I had a horrible experience.”

Label: Negative

Given these few examples, the model picks up the patterns and features associated with positive and negative sentiment. Now, you can ask the model to classify new reviews, such as:

Prompt: “What do you think of this product: ‘The battery life is exceptional, but the design is subpar.’ Is it positive or negative?”

With the few-shot examples in its context, the model can generate a response:

Output: “Based on the given statement, it seems to be a mixed review. The positive aspect is the exceptional battery life, while the negative aspect is the subpar design.”
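The review example can be assembled into a single few-shot prompt programmatically. The sketch below is a minimal illustration; the function name `build_few_shot_prompt` and the instruction line are assumptions introduced here, while the two labeled reviews and the new review come from the example above.

```python
def build_few_shot_prompt(examples, new_review):
    """Place labeled examples before the new input so the model can imitate the pattern."""
    # Hypothetical instruction line; adjust the wording to your task.
    lines = ["Classify each review as Positive or Negative.", ""]
    for review, label in examples:
        lines.append(f"Review: {review}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The final entry has no label; the model is expected to complete it.
    lines.append(f"Review: {new_review}")
    lines.append("Label:")
    return "\n".join(lines)


examples = [
    ("I loved the new smartphone. The camera quality is amazing!", "Positive"),
    ("The customer service was terrible. I had a horrible experience.", "Negative"),
]
prompt = build_few_shot_prompt(
    examples,
    "The battery life is exceptional, but the design is subpar.",
)
print(prompt)
```

Ending the prompt with an empty `Label:` nudges the model to answer in the same format as the examples, which makes the reply easier to parse than a free-form sentence.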

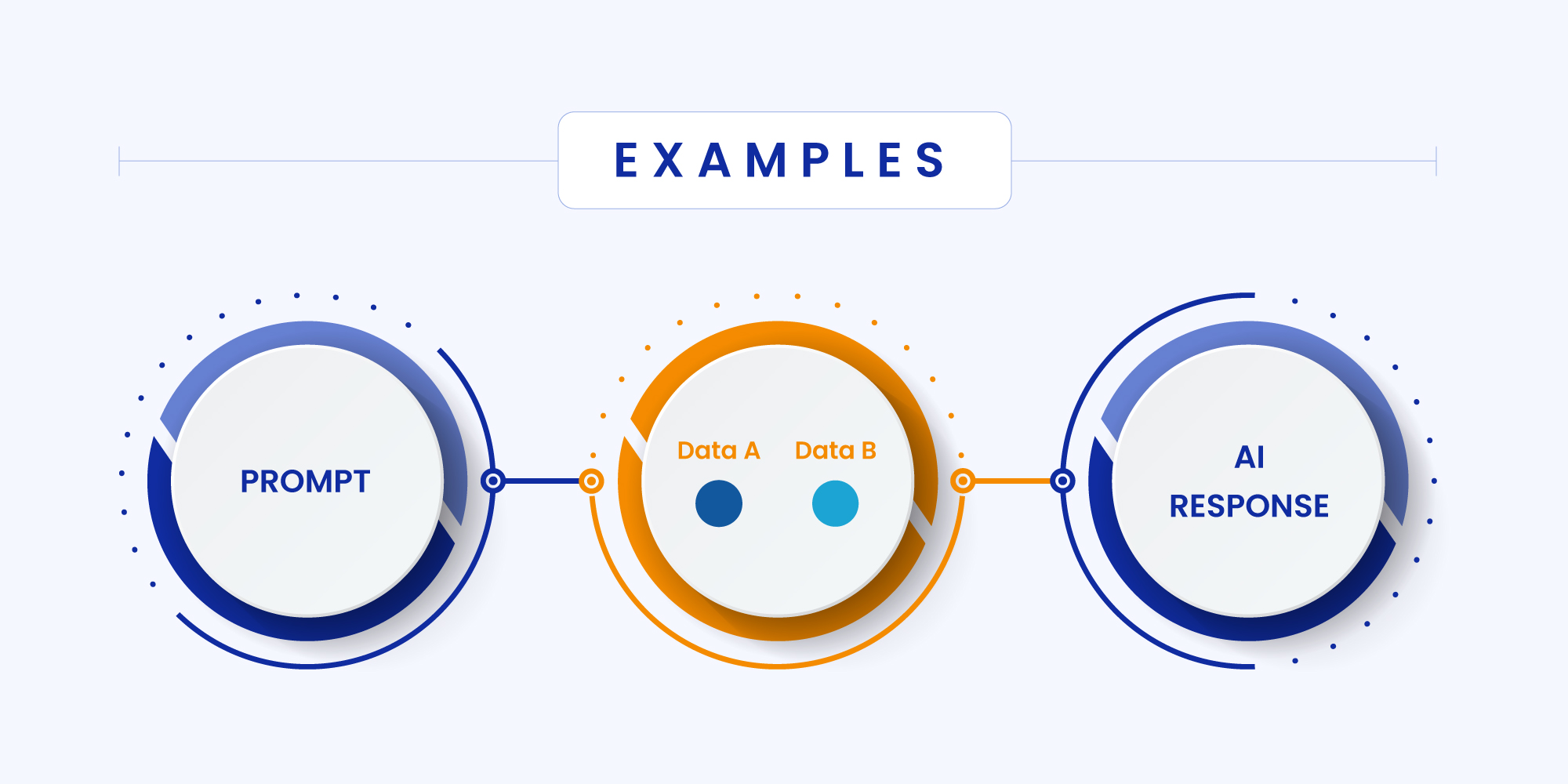

Few-shot prompting encompasses two distinct ways to enable language models to adapt and perform tasks with minimal task-specific data. These are:

1. Data-Level Approach

In this approach, the model is guided by a few examples of the desired input-output pairs. Given this limited task-specific data, the model generalizes from the examples and generates appropriate responses.

2. Parameter-Level Approach

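It refers to fine-tuning the model by adjusting its parameters based on a small amount of task-specific data. Unlike the data-level approach, this changes the model’s weights, so it is closer to few-shot fine-tuning than to prompting in the strict sense.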

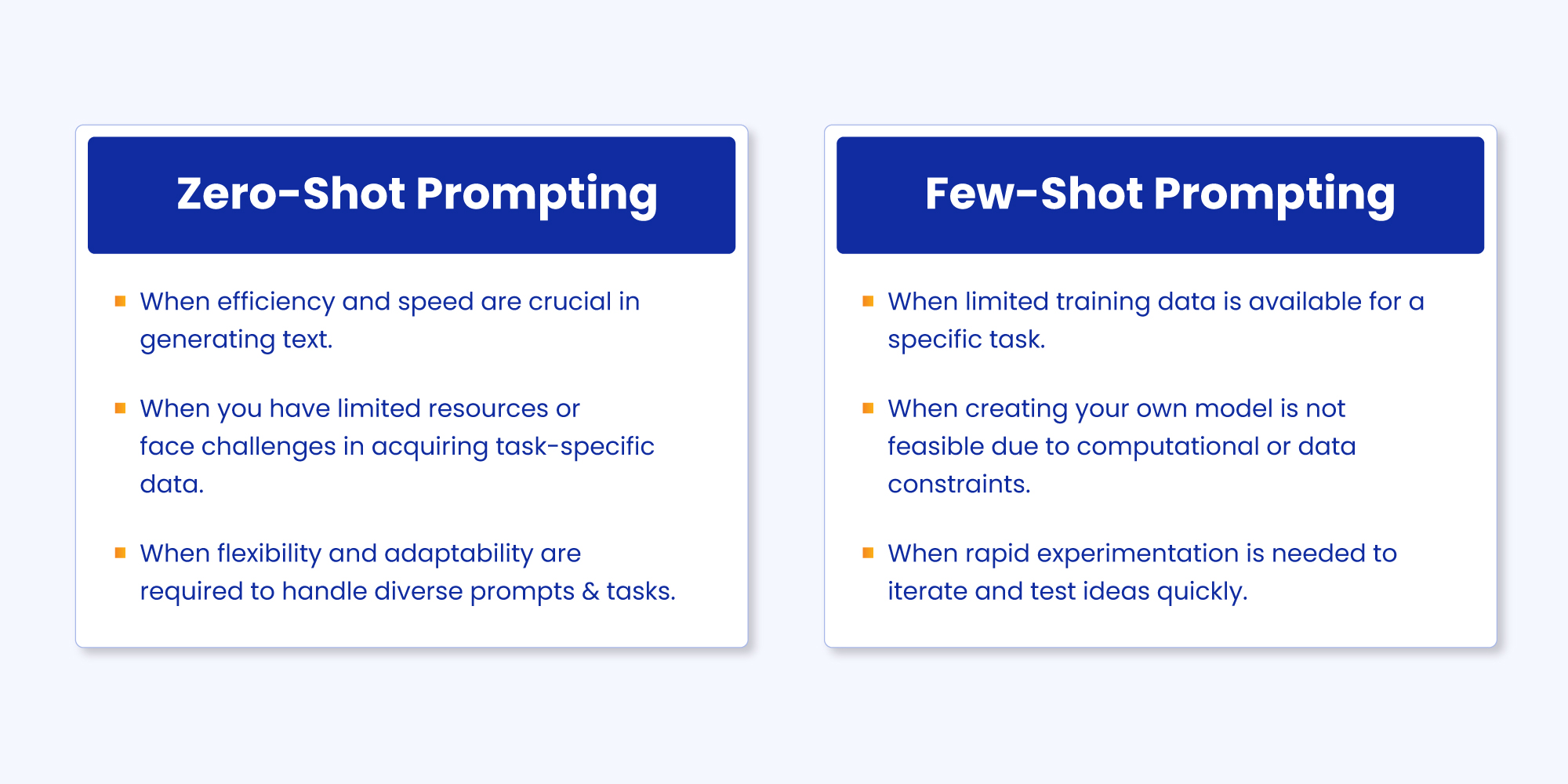

Zero-Shot or Few-Shot Prompting? Knowing When to Utilize Each Technique

As businesses embrace advanced language models to streamline their workflows, choosing the appropriate prompt engineering techniques becomes crucial. Let’s explore the situations where each technique shines:

How Zero-Shot and Few-Shot Techniques Transform Customer Experience

Both zero-shot and few-shot prompting offer immense potential for enhancing customer support operations with LLMs. Here’s a closer look at how they can be efficiently used:

Zero-Shot Prompting for Customer Support

- Multilingual Translation: By using zero-shot prompting for multilingual translation, agents can quickly and accurately translate customer queries and responses, enabling seamless communication. This helps in overcoming language barriers and ensures that customer support is accessible to a wider audience.

- Text Summarization: Customer support teams frequently handle lengthy documents, such as support articles or customer feedback. Zero-shot prompting can be a valuable solution here. By providing a document and a prompt like “Summarize the following text,” they can guide the model to generate a concise summary. This saves them time and helps them extract key information quickly. As a result, they can provide more relevant and faster resolutions to customer queries, ultimately improving the customer experience.

- Generative Question Answering: Zero-shot prompting can be used for generating abstractive or direct answers to customer queries without any specific training. Consider a user asking, “What is the capital of the USA?” Using its pre-trained knowledge, the LLM encodes the query, analyzes its meaning, and produces a concise and direct answer: “The capital of the USA is Washington, D.C.” The response precisely answers the user’s question, eliminating the need to search through multiple results or engage in a back-and-forth conversation. This can help organizations reduce customer frustration and improve customer effort scores.
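The three zero-shot use cases above differ only in the instruction given to the model, so they can share one small template table. This is a rough sketch: the `ZERO_SHOT_TEMPLATES` dictionary, the `render_prompt` helper, and the exact template wording (apart from “Summarize the following text”) are assumptions made for illustration.

```python
# Hypothetical instruction templates, one per support task.
ZERO_SHOT_TEMPLATES = {
    "translate": "Translate the following text to {target_language}:\n\n{text}",
    "summarize": "Summarize the following text:\n\n{text}",
    "answer": "Answer the following question concisely:\n\n{text}",
}


def render_prompt(task: str, text: str, **options: str) -> str:
    """Fill in the template for a task; no examples are included (zero-shot)."""
    if task not in ZERO_SHOT_TEMPLATES:
        raise ValueError(f"Unknown task: {task!r}")
    return ZERO_SHOT_TEMPLATES[task].format(text=text, **options)


qa_prompt = render_prompt("answer", "What is the capital of the USA?")
translation_prompt = render_prompt(
    "translate", "Where is my order?", target_language="German"
)
print(qa_prompt)
print(translation_prompt)
```

Keeping the instructions in one table makes it easy to review and adjust their wording without touching the code that calls the model.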

Few-Shot Prompting for Customer Support

- Sentiment Analysis: Given a small set of labeled examples of positive and negative sentiments, the model can accurately classify sentiment in similar contexts. This allows customer support teams to comprehend customer emotions effectively, leading to more personalized and empathetic support.

- Named Entity Recognition: Given labeled examples where named entities are identified, the model can be guided to recognize similar entities in other texts. This assists support teams in extracting important information like names, addresses, or product references from customer interactions, resulting in more efficient and accurate support.

- Knowledge Base Expansion: Few-shot prompting can assist in expanding and updating knowledge bases. To achieve this, organizations need to equip the language model with synthetic examples of new information or frequently asked questions. This enables the model to automate the process of capturing and organizing knowledge, ensuring that the knowledge base remains up-to-date and comprehensive for stellar customer support.

Level Up Your Customer Support With the Right Prompt Technique!

Advanced prompting techniques can significantly enhance the efficiency of interactions with customers. While zero-shot prompting drives faster responses, few-shot prompting paves the way for more accurate and relevant text generation. The choice between the two depends on your organization’s specific objectives and requirements.

For businesses that prioritize quick generation of text without the need for task-specific training, zero-shot prompting can be highly beneficial. On the other hand, if your organization deals with domain-specific or nuanced content, few-shot prompting is an ideal approach.

If you want to dig deeper into the concept of prompt engineering or witness its transformative impact firsthand, request a live demo today!