Seventy-six percent of enterprises are now prioritizing AI and ML over other initiatives in their IT budgets.

However, the spread of Artificial Intelligence (AI) does not necessarily translate into its adoption.

Imagine this: a company named ABC Corp. manufactures steel tubing, work that requires operating potentially dangerous equipment. To improve safety and worker efficiency, the firm built a high-accuracy AI model designed to drastically reduce hazards by helping frontline workers make safe decisions. But once the model was rolled out, adoption was meager.

Why, you may ask?

As AI- and ML-powered systems continue to proliferate, it’s natural to wonder about the trustworthiness, privacy, and safety of these technologies. While their popularity is undeniable, the inner workings of ML algorithms remain shrouded in mystery, leaving users unsure how they arrive at their results.

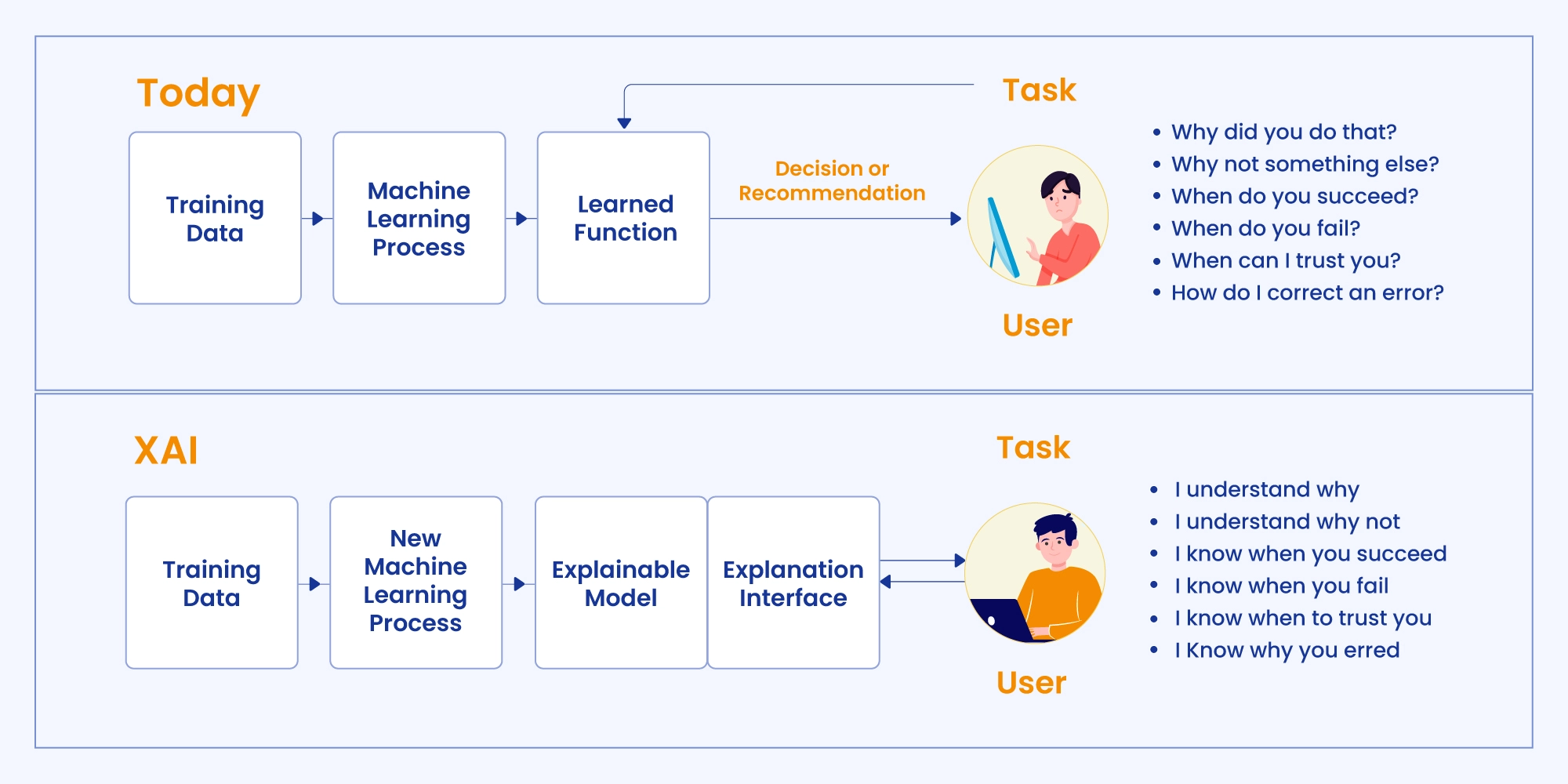

Many ML models, often referred to as black boxes, are too complex for people to interpret directly. Understanding how they work is essential to building trust and confidence in ML systems.

In this blog post, we are going to talk about the essence of “explainability.” Let’s get started, shall we?

What is ML and AI Explainability (XAI)?

Explainable AI is a set of tools and frameworks that help users understand and interpret the predictions made by machine learning models. With it, you can demystify an ML model and assess its impact along with any potential biases.
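To make this concrete, here is a minimal sketch of one widely used, model-agnostic explanation technique: permutation feature importance. It is an illustration only, not how any particular XAI platform works. The model, data, and function names (`threshold_model`, `permutation_importance`) are hypothetical. The idea is to shuffle one feature at a time and measure how much the model's accuracy drops.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows the model classifies correctly."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(labels)

def permutation_importance(model, rows, labels, n_features, n_repeats=10, seed=0):
    """Average drop in accuracy when one feature's values are shuffled.

    A large drop means the model relies heavily on that feature; a drop near
    zero means the model barely uses it.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by a shuffled value.
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical model: predicts 1 when feature 0 exceeds 0.5 and ignores feature 1.
def threshold_model(row):
    return 1 if row[0] > 0.5 else 0

# Hypothetical toy data: feature 0 drives the label, feature 1 is noise.
rows = [[i / 10, (i * 7 % 10) / 10] for i in range(10)]
labels = [threshold_model(row) for row in rows]

print(permutation_importance(threshold_model, rows, labels, n_features=2))
```

Feature 1 scores exactly 0.0 here because the model never reads it, while feature 0 receives a positive score. Because the technique only needs predictions, it works the same way whether the model is a simple rule or a deep network.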

Why Do We Need AI Explainability?

To trust a technology, you need evidence of its accuracy and reliability. Explainable AI supplies that evidence: by showing why a system produced a given output, it lets users verify that the system is working as expected, which builds trust and confidence.

Example 1: A bank uses an AI engine to approve or deny loans. Applicants who are denied a loan will want a clear, acceptable reason for the decision. Bank officials, meanwhile, need to confirm that the data fed into the AI system isn’t biased against certain applicants.
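One common way to give a denied applicant a reason is to report "reason codes": the features that pushed the decision toward denial. The minimal sketch below assumes a simple logistic scoring model. The feature names, weights, baseline applicant, and 0.5 decision threshold are all hypothetical; real credit models are usually more complex and may need dedicated attribution methods.

```python
import math

# Hypothetical weights for a logistic loan-approval model (illustrative only).
WEIGHTS = {"income_k": 0.04, "debt_to_income": -5.0, "late_payments": -0.8}
BIAS = -1.0
# Hypothetical reference applicant to compare against.
BASELINE = {"income_k": 60.0, "debt_to_income": 0.30, "late_payments": 0.0}

def approval_probability(applicant):
    """Logistic model: sigmoid of a weighted sum of the applicant's features."""
    z = BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))

def reason_codes(applicant, top_n=2):
    """Return the features that pulled the score down most versus the baseline.

    In a linear (logistic) model each feature contributes
    weight * (value - baseline_value) to the log-odds gap from the baseline,
    and those contributions sum exactly to that gap.
    """
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [f for _, f in negatives[:top_n]]

applicant = {"income_k": 45.0, "debt_to_income": 0.55, "late_payments": 3}
if approval_probability(applicant) < 0.5:  # assumed decision threshold
    print("Denied. Main reasons:", reason_codes(applicant))
```

For this hypothetical applicant the script prints `Denied. Main reasons: ['late_payments', 'debt_to_income']`. A linear model is used here because its contributions are exact and easy to audit; the same "compare against a baseline" framing carries over to more complex models.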

Example 2: A CRM company uses an AI search engine to surface relevant results. Users naturally wonder why a particular result ranked at the top while others fell further down. Meanwhile, the company’s employees need to confirm that the data fed into the AI enterprise search system isn’t biased against certain results.
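For a ranker, an explanation can be as simple as breaking the score gap between two results into per-signal contributions. The minimal sketch below assumes a linear relevance score; the signal names, weights, and documents are hypothetical and do not describe any particular search engine's ranking model.

```python
# Hypothetical relevance signals and weights for an enterprise search ranker.
SIGNAL_WEIGHTS = {"text_match": 2.0, "click_rate": 1.5, "recency": 0.5}

def score(doc):
    """Linear relevance score: weighted sum of the document's signals."""
    return sum(SIGNAL_WEIGHTS[s] * doc["signals"][s] for s in SIGNAL_WEIGHTS)

def explain_ranking(top, other):
    """Break the score gap between two results into per-signal contributions,
    largest first. Positive values favor `top`; negative values favor `other`."""
    gaps = {
        s: SIGNAL_WEIGHTS[s] * (top["signals"][s] - other["signals"][s])
        for s in SIGNAL_WEIGHTS
    }
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)

docs = [
    {"title": "Reset SSO password",
     "signals": {"text_match": 0.7, "click_rate": 0.2, "recency": 0.9}},
    {"title": "SSO login troubleshooting",
     "signals": {"text_match": 0.9, "click_rate": 0.6, "recency": 0.2}},
]
ranked = sorted(docs, key=score, reverse=True)
for signal, gap in explain_ranking(ranked[0], ranked[1]):
    print(f"{signal}: {gap:+.2f}")
```

Here "SSO login troubleshooting" ranks first, and the breakdown prints `click_rate: +0.60`, `text_match: +0.40`, and `recency: -0.35`: the top result won mainly on engagement and text match, despite being older.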

Because organizations rely heavily on AI-based insights to make critical real-world decisions, it is non-negotiable that these systems be thoroughly vetted and developed according to responsible AI principles.

IBM reports that users of its XAI platform achieved a 15% to 30% increase in model accuracy and a $4.1 million to $15.6 million increase in profits.

Benefits of Explainable AI and ML Models

Getting ahead of the curve with explainable AI and ML models brings several benefits:

1. Building Trust and Adoption

AI models will see widespread adoption only when users are confident that those models make consequential decisions accurately and fairly.

This is where explainable AI and ML models come in. They help companies address five fundamentals: fairness, robustness, transparency, explainability, and privacy. Once organizations can explain the whys and hows of their ML algorithms, they earn user trust, which in turn drives broader adoption.

2. Minimizing Errors

The right explainability tools and techniques let organizations monitor how their models reach decisions and reduce the impact of erroneous results. They also help users trace problems to their root cause, which guides improvements to the underlying model. For instance, understanding which patterns a model uses to produce an outcome, and how it uses them, makes it easier for the MLOps team to continually evaluate and improve model performance.

3. Mitigating Regulatory and Other Risks

Because ML algorithms don’t explain their own predictions, they are often called black-box models. Acting on these predictions blindly in high-stakes decisions, such as anti-money laundering checks or customer support calls, can pose critical risks.

With explainable AI and ML in the picture, technical teams can verify that the algorithms not only comply with applicable laws and regulations but also align with your company’s policies and values.
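As one concrete example of such a check, the minimal sketch below compares approval rates across applicant groups and flags any group whose rate falls well below the best-served group's rate. The group labels and audit data are hypothetical. The 0.8 default mirrors the "four-fifths rule" rule of thumb from US employment-selection guidelines; it is an assumption here, not a legal test under any specific lending or anti-money-laundering regulation.

```python
def approval_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_flags(decisions, threshold=0.8):
    """Flag groups whose approval rate is below `threshold` times the highest
    group's rate (0.8 mirrors the "four-fifths" rule of thumb; an assumption)."""
    rates = approval_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return []  # nobody was approved, so there is no reference rate
    return sorted(g for g, r in rates.items() if r / best < threshold)

# Hypothetical audit log: group A approved 8 of 10 times, group B 5 of 10.
decisions = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
print(approval_rates(decisions))
print(disparate_impact_flags(decisions))
```

Group B is flagged because its rate (0.5) is only 62.5% of group A's (0.8). A check like this shows *that* outcomes differ across groups; explainability tools then help establish *why*.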

The SearchUnify Approach To Explainable AI and ML

SearchUnify recently pulled back the curtain on its proprietary ML framework. With our newly launched ML Workbench, customers can now see how our ML algorithms work their magic to deliver relevant results. Nifty, right? There’s more! In the coming release, we intend to push the envelope by handing customers complete control of our ML systems, allowing them to modify the very core of our ML model to fit their needs and preferences.