Revolutionizing Customer Support: Unleashing the Power of Large Language Models (LLMs)

A Deep Dive into SearchUnify's LLM-Infused Support Solutions

SearchUnifyGPT™ leverages the power of Large Language Models (LLMs), optimized by our proprietary SearchUnifyFRAG™ (Federated Retrieval Augmented Generation) framework, to bring direct, contextual, and personalized answers from across fragmented data silos to support agents, right within their workflows. By deconstructing the user query and semantically distilling entities from the text, SearchUnifyGPT™ infers query intent beyond keywords to generate coherent and contextual resolutions.

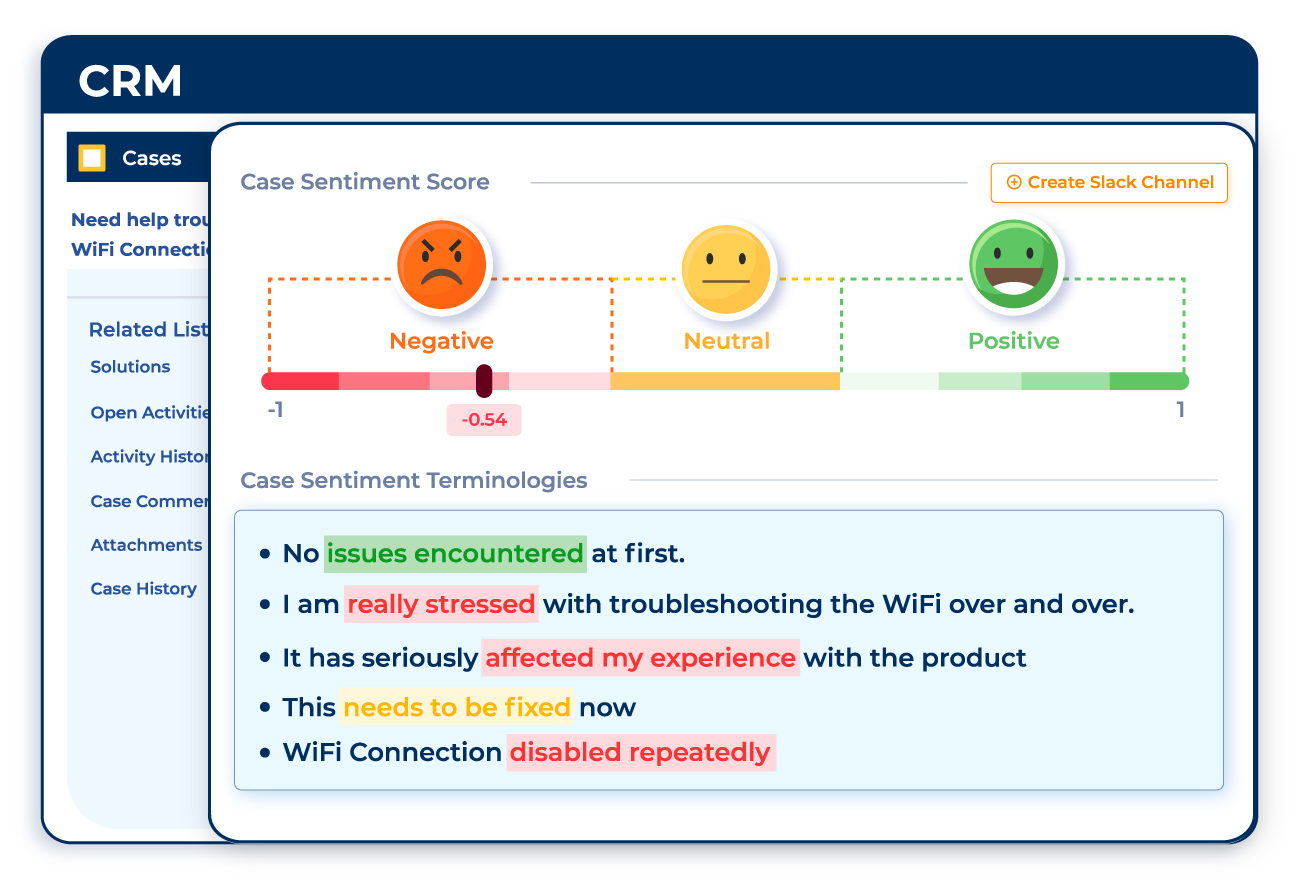

At SearchUnify, we use a zero-shot approach: the model is given a set of predefined entity labels or categories together with a prompt or textual description of the task, rather than task-specific training examples. This helps improve the accuracy and relevance of search results for agents and customers and contributes to more accurate sentiment analysis.
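To make the zero-shot idea concrete, here is a minimal sketch of how such a prompt could be assembled. It is an illustration only, not SearchUnify's implementation: the function name `build_zero_shot_prompt`, the label names, and the sample query are all hypothetical, and the call to an actual model is left out.

```python
from typing import Sequence


def build_zero_shot_prompt(text: str, labels: Sequence[str], task: str) -> str:
    """Combine a task description, the allowed labels, and the input text.

    No labeled examples are included, which is what makes the prompt zero-shot.
    """
    if not labels:
        raise ValueError("at least one label is required")
    label_lines = "\n".join(f"- {label}" for label in labels)
    return (
        f"Task: {task}\n"
        f"Allowed labels:\n{label_lines}\n"
        f"Text: {text}\n"
        "Answer with the labels that apply, one per line."
    )


# Hypothetical labels and query, for illustration only.
prompt = build_zero_shot_prompt(
    text="My invoice export fails with a timeout after the latest upgrade.",
    labels=["product_area", "error_type", "software_version"],
    task="Extract the entities mentioned in a customer support query.",
)
print(prompt)
```

The resulting string would then be sent to whichever LLM is in use; changing the label list changes the task without any retraining.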

Customer support agents typically spend 5–15 minutes writing notes when wrapping up each call or text chat, or when they transfer a case to the next level of support. LLM-powered summarization speeds up the capture of issues and resolutions from a two-party conversation, typically between a customer and an agent. This can greatly reduce case handling time, increase agents’ job satisfaction, sustain high customer engagement, improve customer experiences, and, as a result, boost customer loyalty.

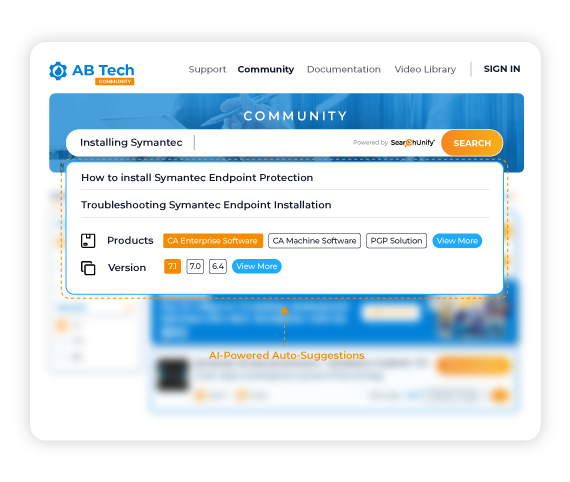

Based on the user’s input, search history, search query popularity, temporal factors, and user interest, LLM-powered intelligent auto-suggestions help reduce customer self-service effort, speed up resolution, improve search efficiency, and assist users who might struggle to describe the information they need accurately.
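As a rough illustration of how the signals listed above (typed input, search history, query popularity, recency, and user interest) could be combined into one ranking, consider the sketch below. The `Candidate` fields, the weights, and the 30-day decay constant are assumptions chosen for the example; they do not describe how SearchUnify actually ranks suggestions, and no LLM is involved in this simplified version.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Candidate:
    query: str
    popularity: int         # hypothetical count of past searches
    days_since_seen: float  # how long ago the query was last searched
    topic: str


def score(c: Candidate, history: set[str], interests: set[str]) -> float:
    """Blend popularity, recency, history, and interest into one number."""
    s = math.log1p(c.popularity)            # damp very popular queries
    s *= math.exp(-c.days_since_seen / 30)  # older queries count for less
    if c.query in history:
        s += 1.0                            # assumed boost: user searched this before
    if c.topic in interests:
        s += 0.5                            # assumed boost: matches a user interest
    return s


def suggest(typed: str, candidates: list[Candidate],
            history: set[str], interests: set[str], limit: int = 5) -> list[str]:
    """Return up to `limit` suggestions that start with the typed text."""
    prefix = typed.lower()
    matches = [c for c in candidates if c.query.lower().startswith(prefix)]
    matches.sort(key=lambda c: score(c, history, interests), reverse=True)
    return [c.query for c in matches[:limit]]


# Hypothetical data, for illustration only.
catalog = [
    Candidate("reset password", 400, 2, "account"),
    Candidate("reset api key", 120, 1, "integrations"),
    Candidate("reset billing cycle", 300, 40, "billing"),
    Candidate("export invoices", 500, 1, "billing"),
]
personalized = suggest("reset", catalog, {"reset api key"}, {"integrations"})
anonymous = suggest("reset", catalog, set(), set())
```

With these sample numbers, the history and interest boosts move "reset api key" ahead of the more popular "reset password" for the personalized user, while the anonymous ranking follows popularity and recency alone.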

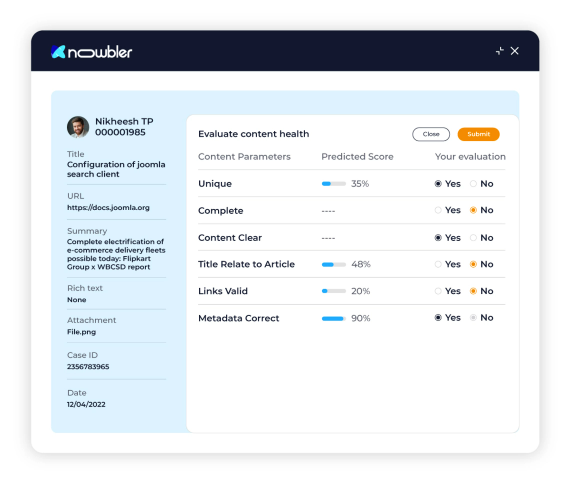

LLMs on their own work well for general questions but struggle with more niche or specific ones. This often leads to hallucinations that are rarely obvious and likely to go undetected by system users. As a result, you have to validate whether the links provided by the model are relevant. That’s where Knowbler’s ML-powered Article Quality Index (AQI) check comes in: it ensures the links are not profane and the sources are real.
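One simple way to picture the "sources are real" part of that validation is to check every link in a generated answer against an index of known articles. The sketch below does only that; it is not Knowbler's AQI, it does not cover profanity or relevance, and the function name `split_links` and the example URLs are hypothetical.

```python
from __future__ import annotations

import re

# Matches http(s) URLs up to the next whitespace or closing bracket.
URL_PATTERN = re.compile(r"https?://[^\s)>\]]+")


def split_links(answer: str, known_sources: set[str]) -> tuple[list[str], list[str]]:
    """Return (verified, unverified) links found in a generated answer."""
    verified: list[str] = []
    unverified: list[str] = []
    for url in URL_PATTERN.findall(answer):
        url = url.rstrip(".,;:")  # drop trailing sentence punctuation
        if url in known_sources:
            verified.append(url)
        else:
            unverified.append(url)
    return verified, unverified


# Hypothetical knowledge-base index and model output, for illustration only.
known = {"https://docs.example.com/kb/1234"}
answer = (
    "See https://docs.example.com/kb/1234 for the fix, "
    "or https://docs.example.com/kb/9999."
)
verified, unverified = split_links(answer, known)
```

Links that land in `unverified` could be removed from the answer or flagged for review before an agent or customer sees them.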

LLM-powered Knowbler auto-generates titles and summarizes case resolutions into KCS-defined templates. This reduces friction for service agents looking to create new knowledge and streamlines knowledge article creation for known issues.

Sentiment analysis often requires detecting nuances and subtle variations in sentiment. Large language models can capture fine-grained sentiment by analyzing the semantic meaning of words and phrases, their relationships, and the overall tone of the text. This helps not only in identifying sentiment polarity (positive or negative) but also in gauging its strength.

SearchUnify Virtual Assistant (SUVA) is the world’s first federated, information retrieval-augmented virtual assistant for fine-tuned, contextual, and intent-driven conversational experiences at scale. Powered by the innovative SearchUnifyFRAG™, it generates relevant and precise responses while addressing the challenges of LLMs.