Ever typed a search query and gotten results that felt like a guessing game? Frustrating, isn’t it? Traditional search engines often struggle with complex or ambiguous queries.

But what if your enterprise search engine could understand you like a human colleague? Enter BERT, a language model that’s transforming enterprise search. It goes beyond basic keyword matching and analyzes the entire sentence, considering how each word relates to the words around it.

For example, a query containing “time flies” might lead a traditional engine astray if it matches each keyword in isolation. BERT, reading the phrase in the context of surrounding words such as “fun,” recognizes that you’re looking for information about time passing quickly. This allows the model to build a more accurate representation of the query and capture the sentence’s meaning beyond the surface level.

BERT: Decoding Your Searches Like a Pro

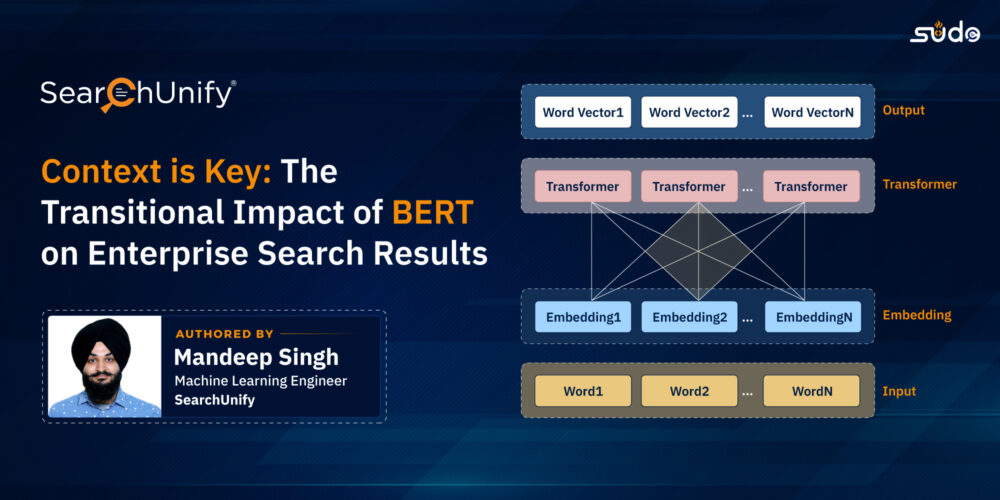

Short for Bidirectional Encoder Representations from Transformers, BERT is an open-source machine learning framework designed to better understand natural language. Its name breaks down into the following key components:

- Bidirectional: Unlike previous models that only considered words in one direction (left to right or right to left), this framework can analyze the context of a word by looking at both its preceding and following words. This allows it to better understand the meaning and intent of a sentence.

- Encoder: The “encoder” part converts text into a numerical representation, thus enabling machines to understand it. The model uses a specific type of encoder called a transformer, which excels at capturing relationships between words.

- Representations: These are the numerical codes that represent the meaning of words and sentences within a text. BERT creates these representations by considering both the context and the intrinsic meaning of each word.

- Transformers: BERT uses a specific type of neural network architecture called a transformer. Transformers excel at identifying relationships between words, allowing BERT to grasp complex sentence structures and subtle nuances of language.
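To make the “relationships between words” idea concrete, here is a minimal sketch of the self-attention step at the heart of a transformer, written in plain Python. It is a simplification under stated assumptions: the two-dimensional word vectors are made up for illustration, and each vector serves as its own query, key, and value, whereas a real BERT model learns separate projections and stacks many such layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Blend every word vector with all the others, left and right.

    Simplification: queries, keys and values are the input vectors
    themselves; a real transformer learns separate projections.
    """
    dim = len(vectors[0])
    mixed, all_weights = [], []
    for query in vectors:
        # Scaled dot product: similarity of this word to every word.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # Weighted average of all word vectors = context-aware vector.
        mixed.append([sum(w * v[i] for w, v in zip(weights, vectors))
                      for i in range(dim)])
    return mixed, all_weights

# Hypothetical 2-dimensional vectors for a three-word sentence.
tokens = ["time", "flies", "fast"]
vectors = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

contextual, weights = self_attention(vectors)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how much one word draws on every word in the sentence, including those that come after it. That two-way view is what “bidirectional” refers to.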

How Does BERT Work Its Magic?

The following key features enable BERT to interpret human language:

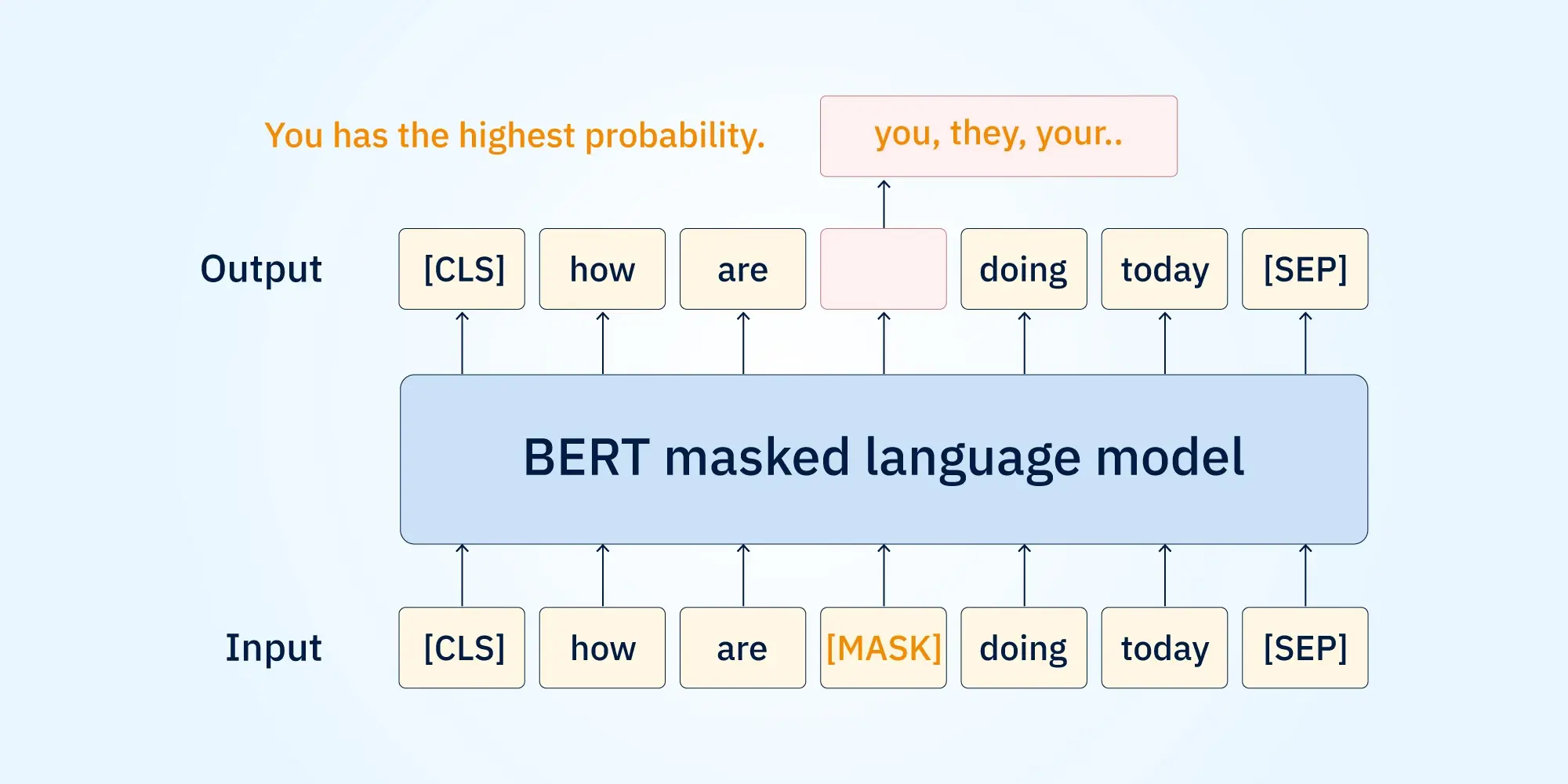

- Masked Language Modeling: During training, BERT is presented with sentences in which some words are masked out. The model predicts each masked word from the surrounding words and their context. This process helps it develop a deep understanding of word relationships and how words function within a sentence.

- Fine-tuning for Specific Tasks: Although BERT is a pre-trained model that already has general language understanding, its true power lies in its ability to be fine-tuned for specific tasks. This way, support organizations can adjust the model’s parameters to excel at particular tasks, such as customer sentiment analysis, question answering, or text summarization.

- Attention Mechanism: This mechanism allows the framework to focus on the most relevant parts of a sentence when making predictions. Imagine you’re reading a sentence with several clauses. The attention mechanism helps it to concentrate on the clause most relevant to the task, improving its accuracy and efficiency.

- Contextual Word Embeddings: BERT doesn’t simply assign a fixed meaning to each word. It generates dynamic word embeddings that change depending on the context in which the word appears. This allows BERT to capture the subtle differences in meaning that words can have depending on their surroundings.

- Transfer Learning: Due to its pre-trained nature, this framework can be easily applied to various tasks without requiring massive amounts of data for each specific application. This saves time and resources, making it a powerful tool for various NLP applications.
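The masked language modeling step described above can be sketched in a few lines of Python. This only shows how training inputs might be prepared, not the model that learns from them. The function name `mask_tokens` and the sample sentence are illustrative assumptions. The 15% masking rate follows the original BERT recipe, but the sketch always substitutes `[MASK]`, whereas BERT sometimes swaps in a random word or leaves the original in place.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide a random subset of tokens and remember the originals.

    Returns (masked_tokens, targets), where targets maps each masked
    position to the word the model would be trained to predict.
    """
    rng = random.Random(seed)  # seeded so the example is repeatable
    masked, targets = list(tokens), {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked[i] = MASK
            targets[i] = tok
    if not targets:  # short inputs: make sure at least one word is hidden
        i = rng.randrange(len(tokens))
        masked[i], targets[i] = MASK, tokens[i]
    return masked, targets

# Hypothetical training sentence, split on whitespace for simplicity.
sentence = "the support team resolved the ticket quickly".split()
masked, targets = mask_tokens(sentence)
print(" ".join(masked))
print(targets)
```

During pre-training, the model sees only the masked sentence and is scored on how well it recovers the words stored in `targets`, using context from both sides of each gap.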

BERT’s Superpowers: Understanding Different Use Cases Better

BERT’s impact shows up across several use cases:

- Contextual Understanding: As explained above, the model captures the context and relationships between words in a sentence. This allows enterprise search engines to interpret user queries more accurately, even those with multiple possible meanings.

- Customer Feedback Analysis: The framework is adept at analyzing customer reviews and detecting dissatisfaction from social media comments or mentions. Support organizations can leverage this framework to gain valuable insights into customer sentiment, identify areas for improvement, and enhance overall customer experience.

- Chatbots and Virtual Assistants: Equipping chatbots and virtual assistants with BERT’s capabilities ensures users engage in more natural and humanized conversations. Additionally, with BERT, chatbots and virtual assistants can better understand user requests, therefore providing more helpful and personalized responses.

- Content Creation: The model can assist in analyzing user preferences and trends, enabling the creation of content that resonates better with target audiences. Additionally, it can be used to optimize marketing campaigns by understanding the sentiment and intent behind user interactions.
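For the search use case in particular, one common pattern is to compare a query and each document by the similarity of their embeddings rather than by shared keywords. The sketch below assumes that approach. The three-number vectors are invented for illustration, where a real system would obtain them from a BERT-style encoder, and the helper names `cosine` and `rank` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank(query_vec, doc_vecs):
    """Return document titles ordered from most to least similar."""
    scored = [(cosine(query_vec, vec), title)
              for title, vec in doc_vecs.items()]
    return [title for _, title in sorted(scored, reverse=True)]

# Made-up embeddings standing in for real encoder output.
docs = {
    "Reset your account password": [0.9, 0.1, 0.0],
    "Update billing details": [0.1, 0.9, 0.1],
    "Troubleshoot login failures": [0.7, 0.1, 0.6],
}
query = [0.6, 0.1, 0.7]  # stands in for a query like "can't sign in"

results = rank(query, docs)
for title in results:
    print(title)
```

The query shares no keywords with the login article, yet that article ranks first because the two vectors point in nearly the same direction. This is the kind of match keyword-only search tends to miss.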

Ushering In a New Era of Delivering Relevant Results

So, the next time you type a search query, there’s a good chance that BERT is working behind the scenes to help you find exactly what you need.

Intriguing, isn’t it? Want to know how SearchUnify leverages BERT to turbocharge its enterprise search engine’s ability to deliver relevant results and bridge the gap between humans and machines? Then request a demo, right away!